Mozilla Data Documentation

This documentation was written to help Mozillians analyze and interpret data collected by our products, such as Firefox and Mozilla VPN. Mozilla refers to the systems that collect and process this data as Telemetry.

At Mozilla, our data-gathering and data-handling practices are anchored in our Data Privacy Principles and elaborated in the Mozilla Privacy Policy. You can learn more about what data Firefox collects and the choices you can make as a Firefox user in the Firefox Privacy Notice.

If there's information missing from these docs, or if you'd like to contribute, see this article on contributing, and feel free to file a bug here.

You can locate the source for this documentation in the data-docs repository on GitHub.

Using this document

This documentation is divided into the following sections:

Introduction

This section provides a quick introduction to Mozilla's Telemetry data: it should help you understand how this data is collected and how to begin analyzing it.

Cookbooks & Tutorials

This section contains tutorials presented in a simple problem/solution format, organized by topic.

Data Platform Reference

This section contains detailed reference material on the Mozilla data platform, including links to other resources where appropriate.

Dataset Reference

In-depth references for some of the major datasets we maintain for our products.

For each dataset, we include a description of the dataset's purpose, what data is included, how the data is collected, and how you can change or augment the dataset. You do not need to read this section end-to-end.

Historical Reference

This section contains some documentation of things that used to be part of the Mozilla Data Platform, but are no longer. You can generally ignore this section, it is intended only to answer questions like "what happened to X?".

You can find the fully-rendered documentation here, rendered with mdBook, and hosted on Github pages.

Introduction

This section is an introductory guide to analyzing Telemetry data: it should give you enough knowledge and understanding to begin exploring our systems. After reading through this section, you can look through the tutorials, which has more specific guides on performing particular tasks.

What Data does Mozilla Collect?

Mozilla, like many other organizations, relies on data to make product decisions. However, unlike many other organizations, Mozilla balances its goal of collecting useful, high-quality data with giving its users meaningful choice and control over their own data. Our approach to data is most succinctly described by the Mozilla Privacy Principles. If you want to know what Mozilla thinks about data, the Principles will tell you that.

From those principles come Mozilla's Privacy Notices. They differ from product to product because the data each product deals with is different. If you want to know what kinds of data each Mozilla product collects and what we do with it, the Privacy Notices will tell you that.

From the Principles and the Notices Mozilla derives operational processes to allow it to make decisions about what data it can collect, store, and publish. Here are a few of them:

- Data Collection: Mozilla's policies around data collection

- Data Publishing: How Mozilla publishes (a subset of) of the data it collects for the public benefit

If you want to know how we ensure the data Mozilla collects, store, and publish abide by the Privacy Notices and the Principles, these processes will tell you that.

The data Mozilla collects can roughly be categorized into three categories: product telemetry, usage logs and website telemetry.

Product Telemetry

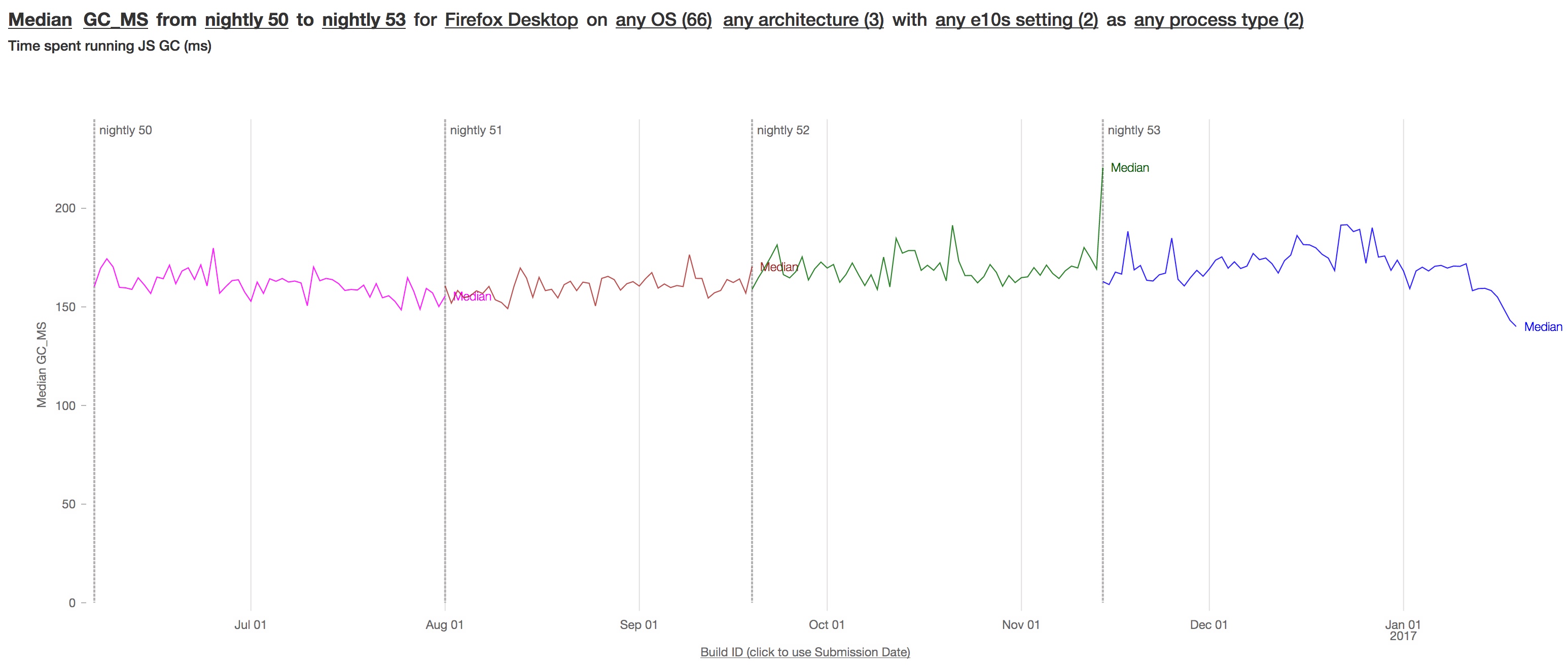

Most of our products, including Firefox, are instrumented to send small JSON packets called "pings" when telemetry is enabled. Pings include some combination of environment data (e.g., information about operating system and hardware), measurements (e.g., for Firefox, information about the maximum number of open tabs and time spent running in JavaScript garbage collections), and events (indications that something has happened).

Inside Firefox, most Telemetry is collected via a module called "Telemetry". The details of our ping formats is extensively documented in the Firefox Source Docs under Toolkit/Telemetry.

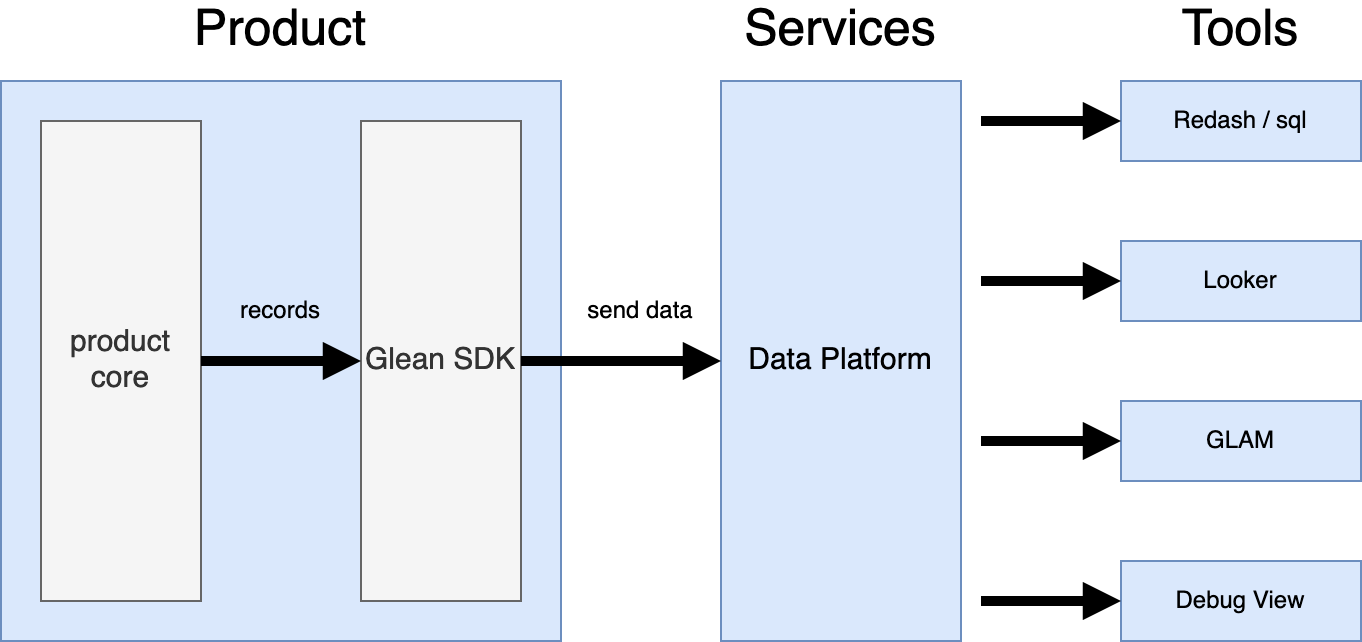

In newer products like Firefox for Android, instrumentation is handled by the Glean SDK, whose design was inspired from what Mozilla learned from the implementation of the Telemetry module and has many benefits. At some point in the near future, Mozilla plans to replace the Telemetry module with the Glean SDK. For more information, see Firefox on Glean (FOG).

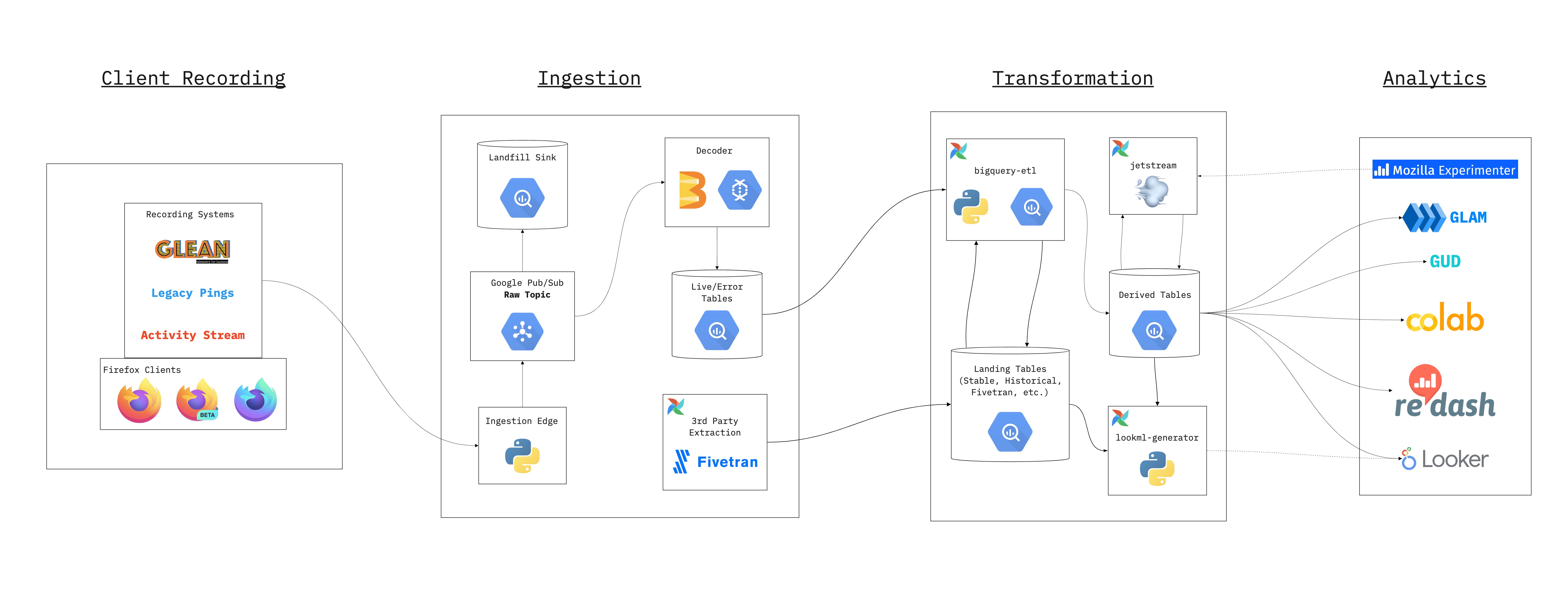

When ping submissions from our clients hit our end points, they are aggregated and stored into ping-level datasets. On a daily basis, the information in these pings datasets is summarized and transformed into derived datasets which are easier to reason about and faster to query. You can learn more about this in Guiding Principles for Data Infrastructure.

Both the ping and derived datasets are viewable using tools like GLAM and Looker. For more information, see Tools for Data Analysis.

Usage Logs

Some of our products, like Firefox Sync, produce logs on the server when they are used. For analysis purposes, we take this log data, strip it user identifiers and summarize it into derived datasets which can be queried with either BigQuery or Looker. As with product telemetry, this data can be helpful for understanding how our products are used. For example, it can tell us how many people from a particular locale are engaging with a particular service.

Website Telemetry

Mozilla uses tools like Google Analytics to measure interactions on our web sites like mozilla.org. To facilitate comparative analysis with product and usage telemetry, we export much of this data into our Data Warehouse, so that it can viewed with Looker and other tools.

Tools for Data Analysis

This is a starting point for making sense of the tools used for analyzing Mozilla data. There are different tools available, each with their own strengths, tailored to a variety of use cases and skill sets.

High-level tools

These web-based tools do not require specialized technical knowledge (e.g. how to write an SQL query, deep knowledge of BigQuery). This is where you should start.

Looker

In 2020, Mozilla chose Looker as its primary tool for analyzing data. It allows data exploration and visualization by experts and non-experts alike.

For a brief introduction to Looker, see Introduction to Looker.

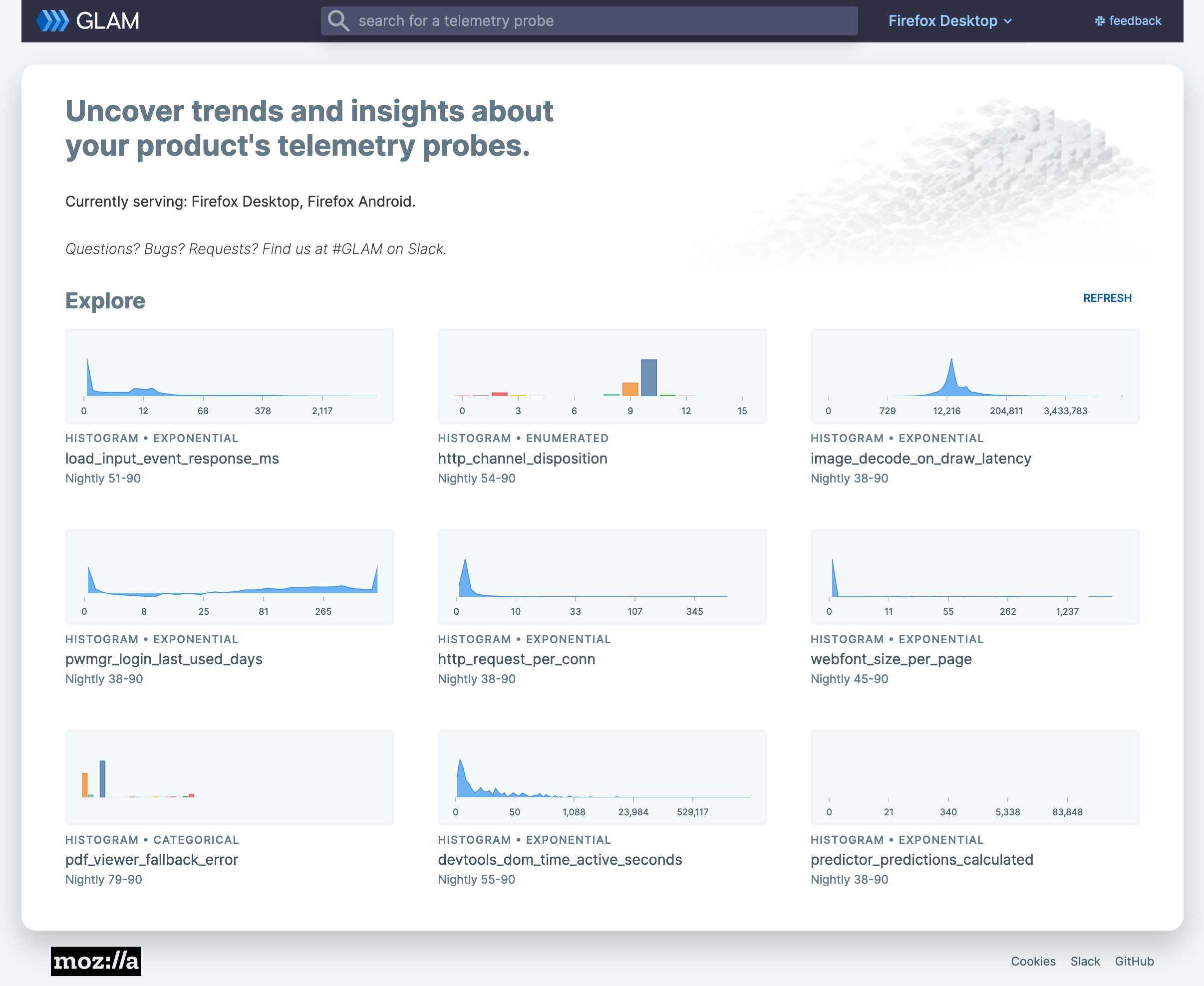

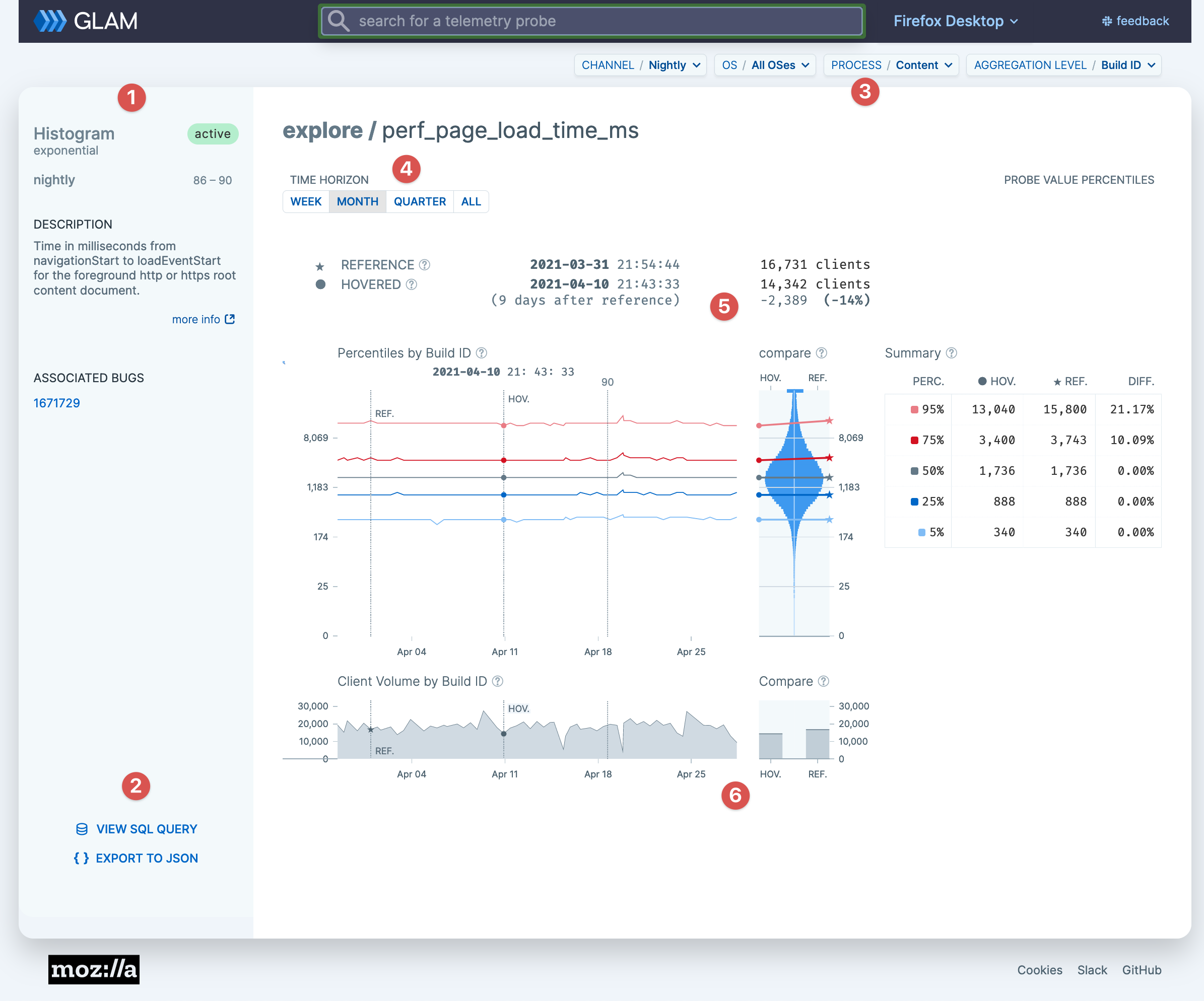

Glean Aggregated Metrics Dashboard (GLAM)

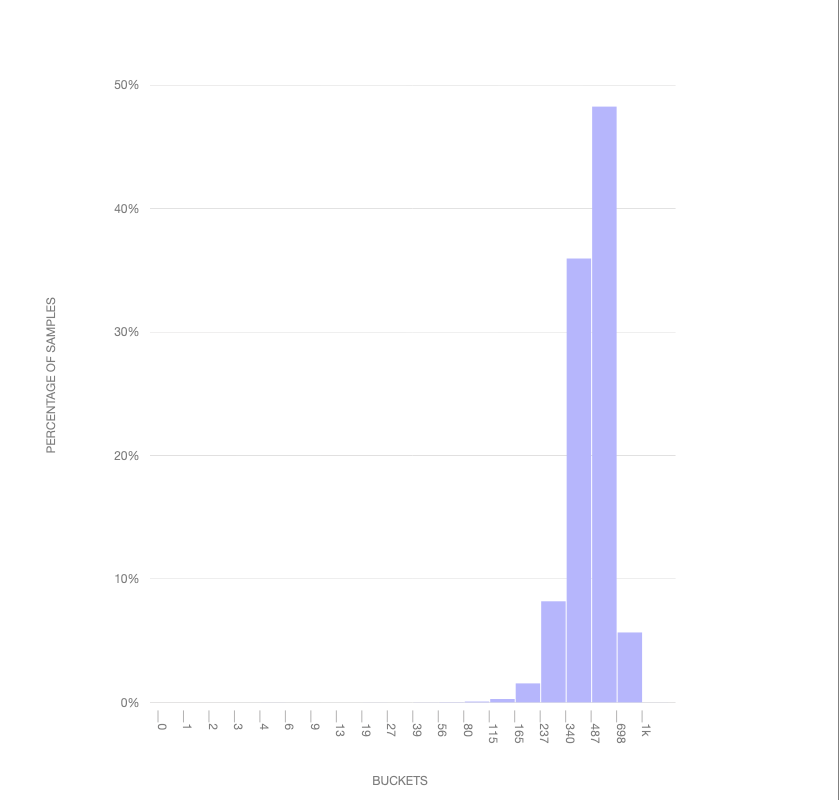

The Glean Aggregated Metrics Dashboard (GLAM) is an interactive dashboard that is Mozilla’s primary self-service tool for examining the distributions of values of specific individual telemetry metrics, over time and across different user populations. It is similar to GUD in that it is meant to be usable by everyone; no specific data analysis or coding skills are needed. But while GUD is focused on a relatively small number of high level, derived product metrics about user engagement (e.g. MAU, DAU, retention, etc) GLAM is focused on a diverse and plentiful set of probes and data points that engineers capture in code and transmit back from Firefox and other Mozilla products.

For more information on how to use GLAM, see Introduction to GLAM.

Lower-level tools

These tools require more specialized knowledge to use.

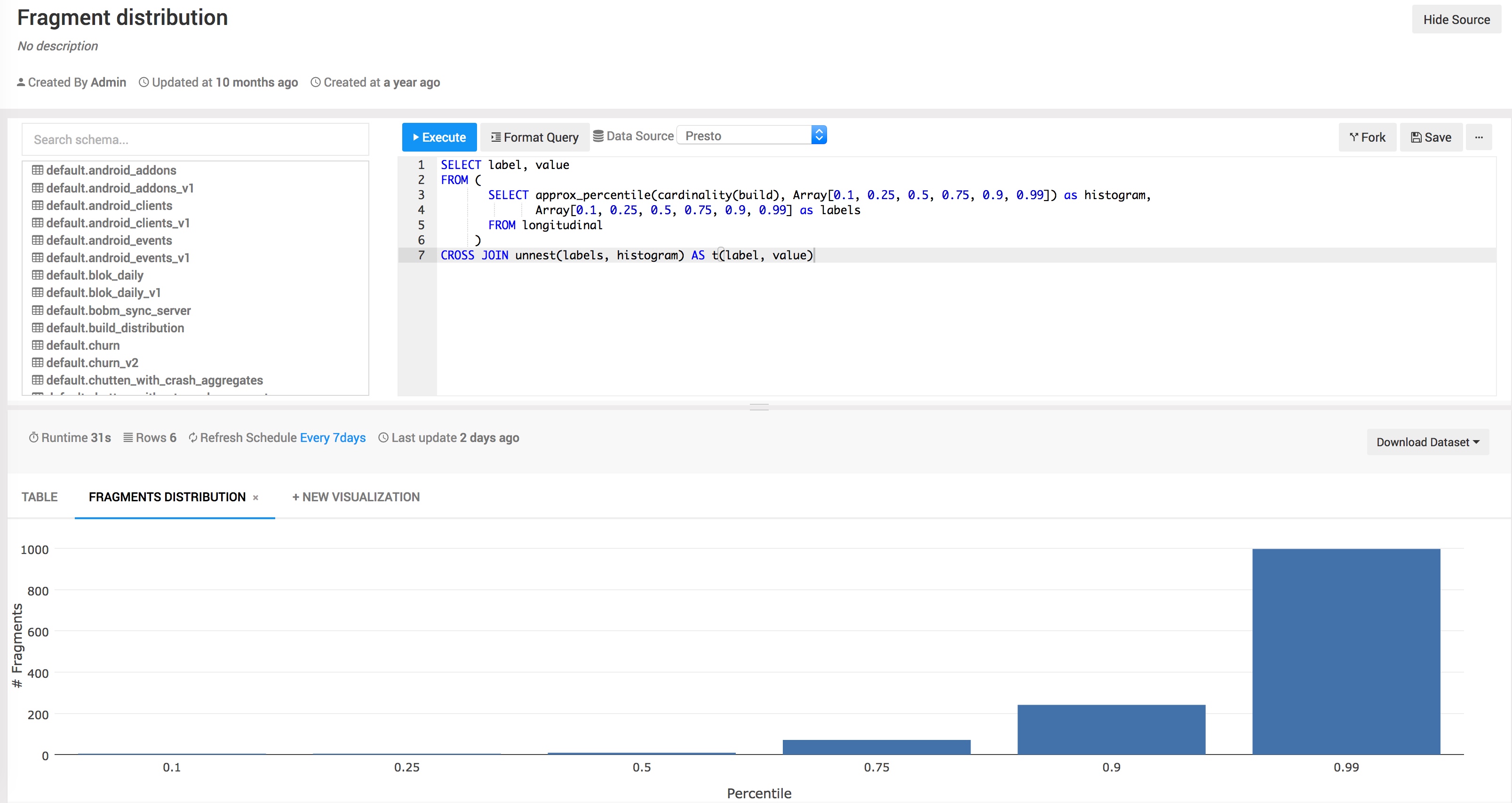

sql.telemetry.mozilla.org (STMO)

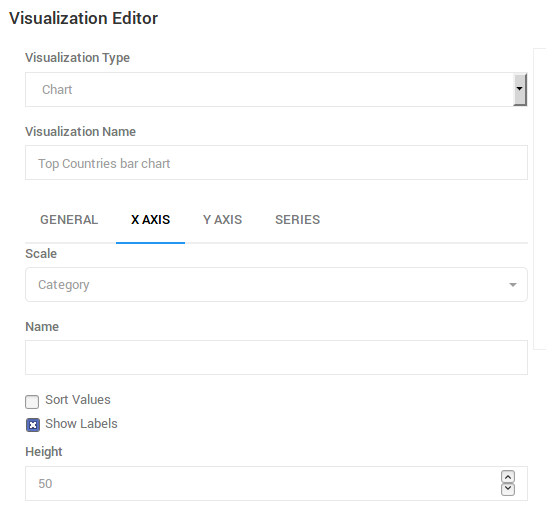

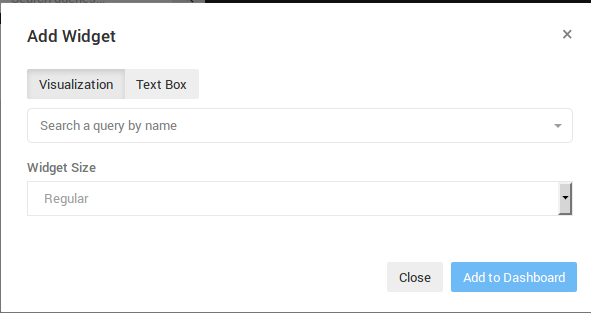

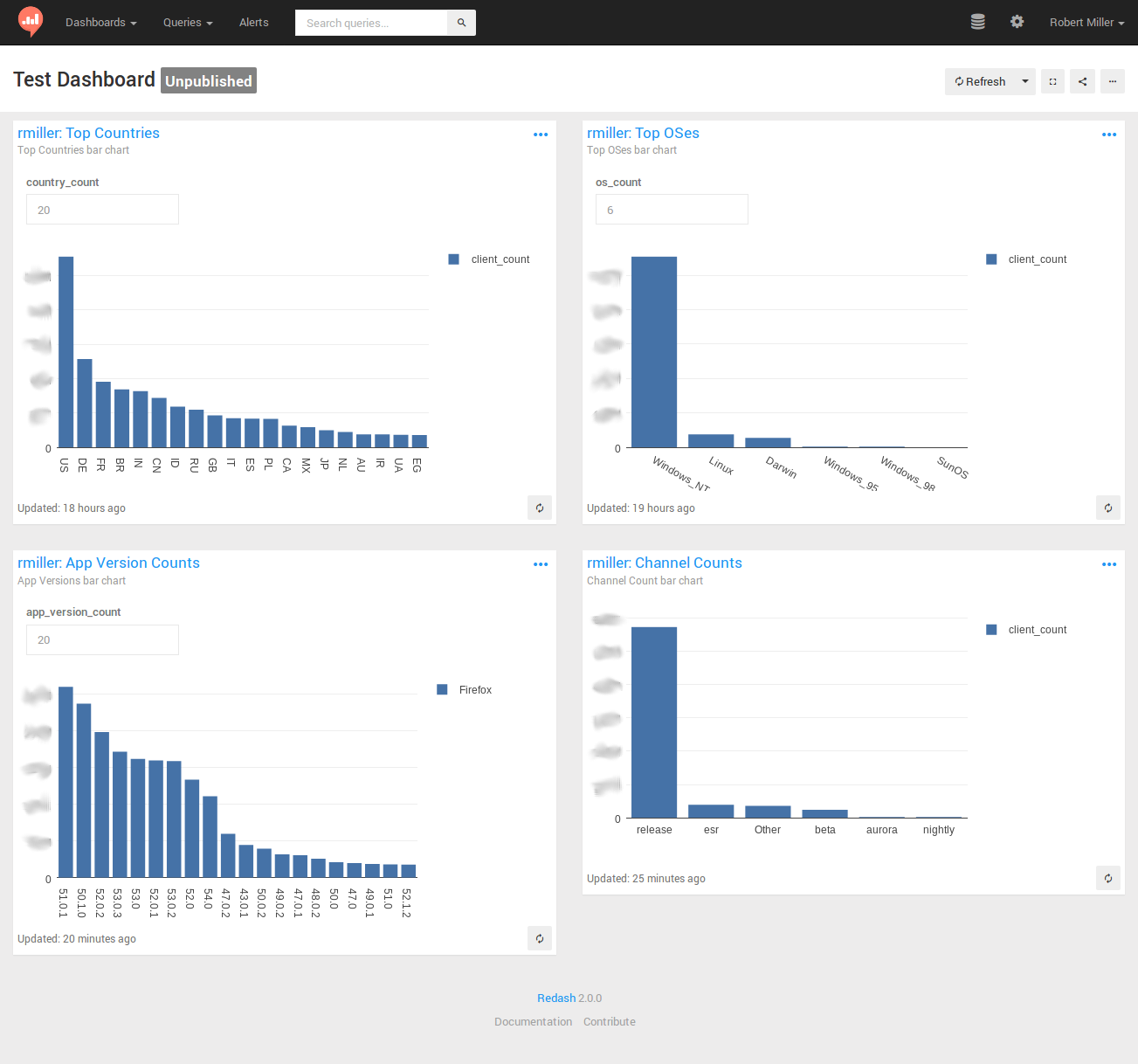

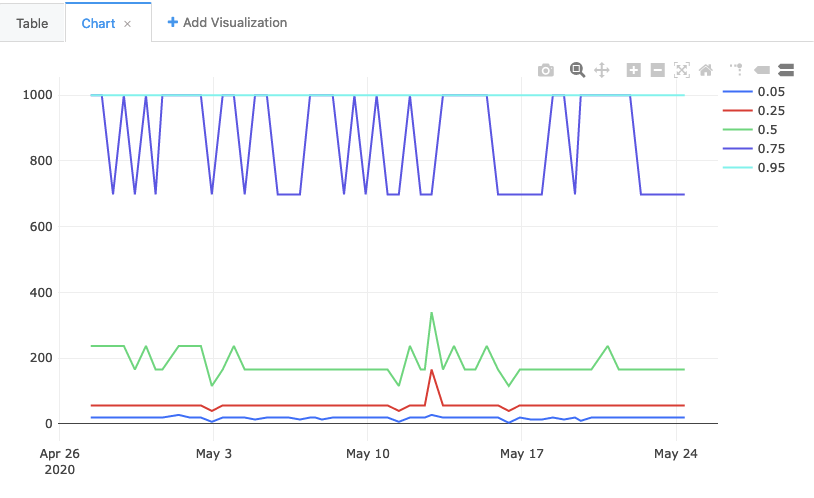

The sql.telemetry.mozilla.org (STMO) site

is an instance of the very fine Redash software, allowing

for SQL-based exploratory analysis and visualization / dashboard

construction.

Requires (surprise!) familiarity with SQL, and for your data to be explicitly exposed as an STMO data source.

You can learn more about how to use it in Introduction to STMO.

Note that while STMO is not yet considered deprecated, Looker is the preferred solution for producing data visualizations and dashboards at Mozilla (where possible).

Deprecated tools

These tools are still available, but are generally not recommended.

Telemetry Measurement Dashboard

The Telemetry Measurement Dashboard (TMO) site is the 'venerable standby' of Firefox telemetry analysis tools. It is the predecessor to GLAM (see above) and is still lightly maintained until we are sure that GLAM covers all of its use cases.

Terminology

Table of Contents

This glossary provides definitions for some common terms used in the Mozilla data universe.

If you're new to Mozilla, you may also find the general glossary on wiki.mozilla.org helpful.

- AET

- Analyst

- Amplitude

- BigQuery

- Build ID

- Client ID

- Data Analyst

- Data Engineer

- Data Practitioner

- Data Scientist

- Dataset

- DAU

- Derived Dataset

- Glean

- GCP

- GeoIP

- Ingestion

- Intensity

- KPI

- Metric

- MAU

- Ping

- Ping Table

- Pipeline

- Probe

- Profile

- Query

- Retention

- Schema

- Session

- Subsession

- STMO (sql.telemetry.mozilla.org)

- Telemetry

- URI

- URL

- WAU

AET

Account Ecosystem Telemetry (never fully launched); see the PRD

Analyst

See Data Analyst.

Amplitude

A third-party product formerly used by several teams within Mozilla for analysis of user events.

BigQuery

BigQuery is Google Cloud's managed data warehouse. Most of the data described on this site is stored and queried using BigQuery. See Accessing and working with BigQuery for more details.

Build ID

A unique identifier for a build like 20210317095331.

Often used to identify and aggregate telemetry submitted by specific versions of our software.

Note that the format may differ across product lines.

Client ID

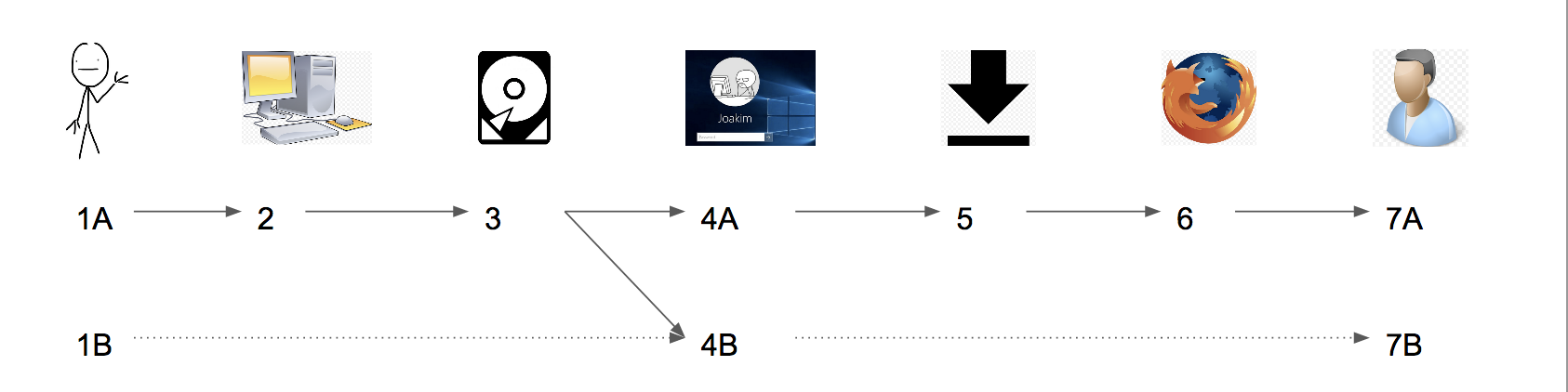

A unique id identifying the client who sent a ping.

Data Analyst

This is a common job title for someone who spends a large amount of their time analyzing data. At Mozilla, we tend not to use this term or title, favoring Data Practitioner or Data Scientist instead.

Data Engineer

A "Data Engineer" at Mozilla generally refers to someone on the Data Engineering team. They implement and maintain the data platform and tools described in this document. They may also assist data scientists or other data practitioners, as needed.

Data Practitioner

A data practitioner is someone who looks at data, identifies trends and other qualitative measurements in them, and creates charts and dashboards. It could be anyone: engineer, product manager, data engineer or data scientist.

Data Scientist

A "Data Scientist" at Mozilla generally refers to someone on the Data Science team. They have a broad array of technical backgrounds and a core set of common professional skills:

- applying statistical methods to noisy data to answer questions about what, how, or why something is happening

- transform unstructured data into usable metrics and models

- augmenting strategic product and decision-making with empirical evidence created and curated by the team

Dataset

A set of data, which includes ping data, derived datasets, etc.; sometimes it is used synonymously with “table”; sometimes it is used technically to refer to a BigQuery dataset, which represents a container for one or more tables.

DAU

Daily Active Users - The number of unique client ids that are active each day.

For more details, see the DAU Metric page on Confluence.

Derived Dataset

A processed dataset, such as Clients Daily. At Mozilla, this is in contrast to a raw ping table which represents (more or less) the raw data submitted by our users.

Glean

Glean is Mozilla’s product analytics & telemetry solution that provides a consistent experience and behavior across all of our products. Most of Mozilla's mobile apps, including Fenix, have been adapted to use the Glean SDK. For more information, see the Glean Overview.

GCP

Google Cloud Platform (GCP) is a suite of cloud-computing services that runs on the same infrastructure that Google uses internally for its end-user products.

GeoIP

IP Geolocation involves attempting to discover the location of an IP address in the real world. IP addresses are assigned to an organization, and as these are ever-changing associations, it can be difficult to determine exactly where in the world an IP address is located. Mozilla’s ingestion infrastructure attempts to perform GeoIP lookup during the data decoding process and subsequently discards the IP address before the message arrives in long-term storage.

Ingestion

Mozilla's core data platform has been built to support structured ingestion of arbitrary JSON payloads whether they come from browser products on client devices or from server-side applications that have nothing to do with Firefox; any team at Mozilla can hook into structured ingestion by defining a schema and registering it with pipeline. Once a schema is registered, everything else is automatically provisioned, from an HTTPS endpoint for accepting payloads to a set of tables in BigQuery for holding the processed data.

Intensity

Intuitively, how many days per week do users use the product? Among profiles active at least once in the week ending on the date specified, the number of days on average they were active during that one-week window.

KPI

Key Performance Indicator - a metric that is used to measure performance across an organization, product, or project.

Metric

In general: a metric is anything that you want to (and can) measure. This differs from a dimension which is a qualitative attribute of data.

In the context of Glean, a metric refers to an instrumented measure for a specific aspect of the product (similar to a probe in Firefox Telemetry).

MAU

Monthly Active Users - the number of unique profiles active in the 28-day period ending on a given day. The number of unique profiles active at least once during the 28-day window ending on the specified day.

Ping

A ping represents a message that is sent from the Firefox browser to Mozilla’s Telemetry servers. It typically includes information about the browser’s state, user actions, etc. For more information, see Common ping format.

Ping Table

A set of pings that is stored in a BigQuery table. See article on raw ping datasets.

Pipeline

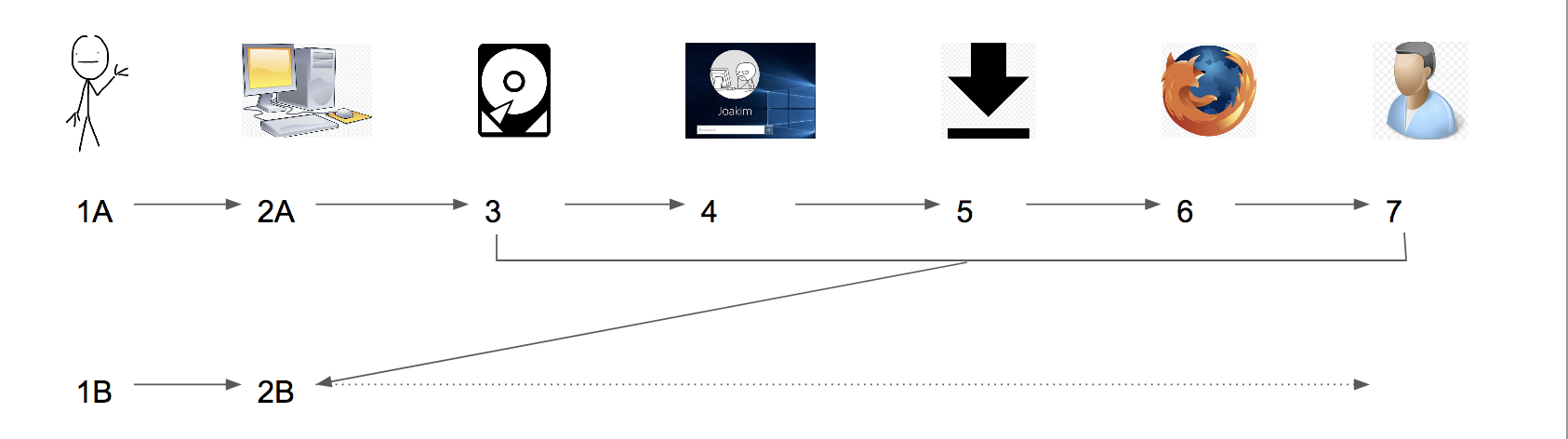

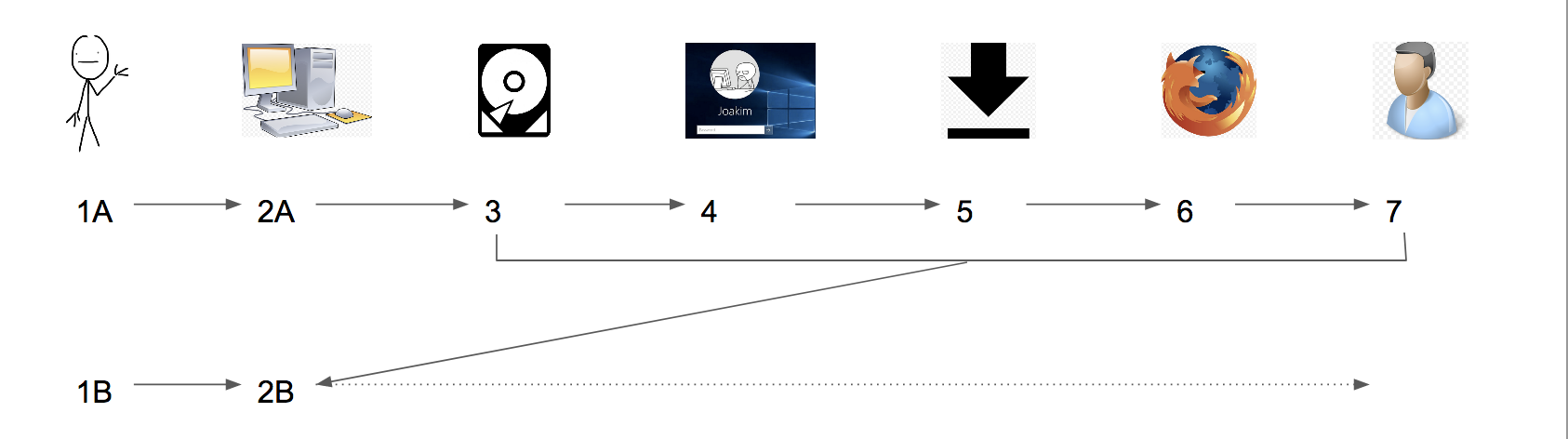

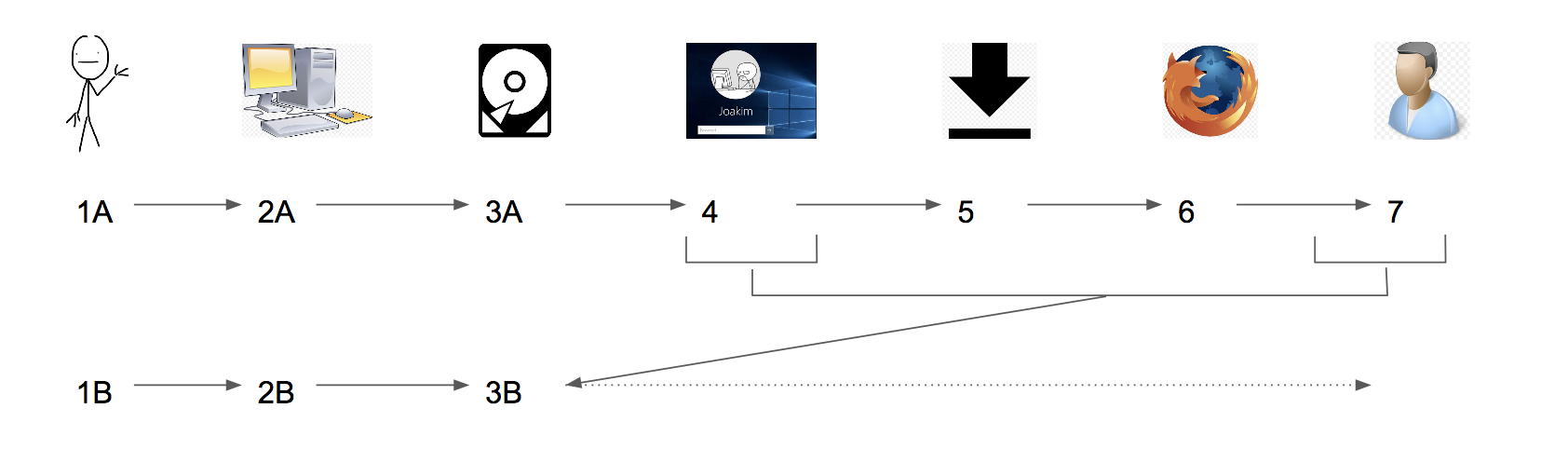

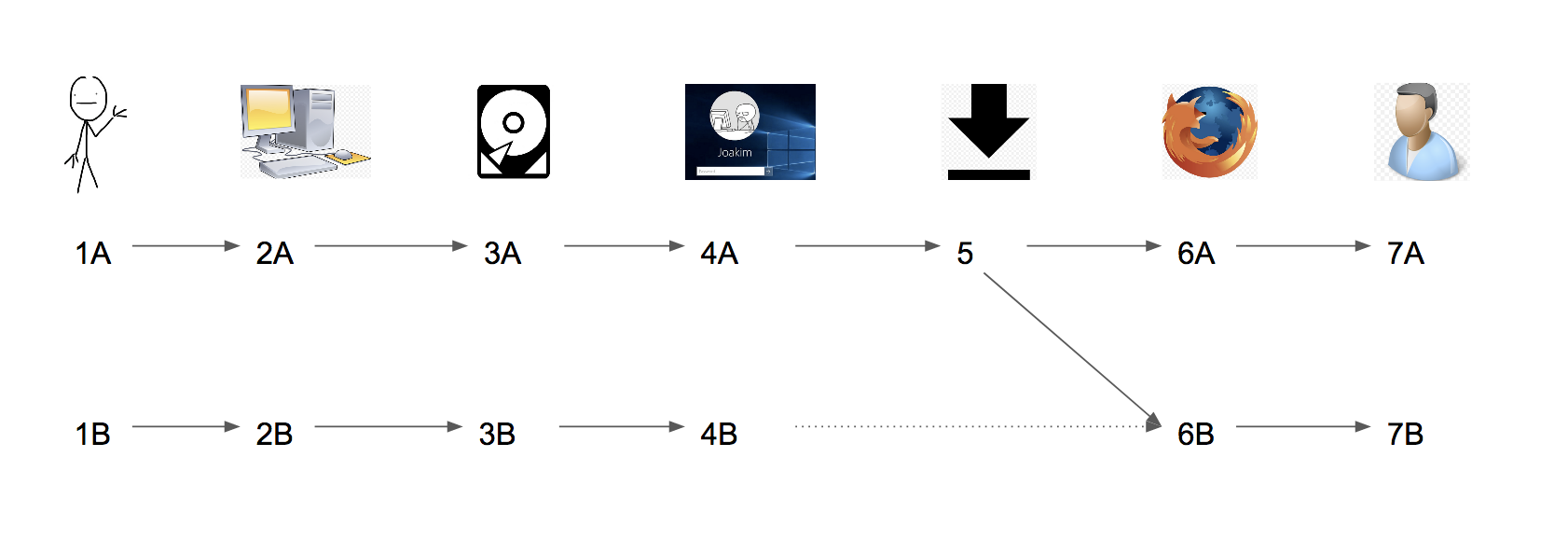

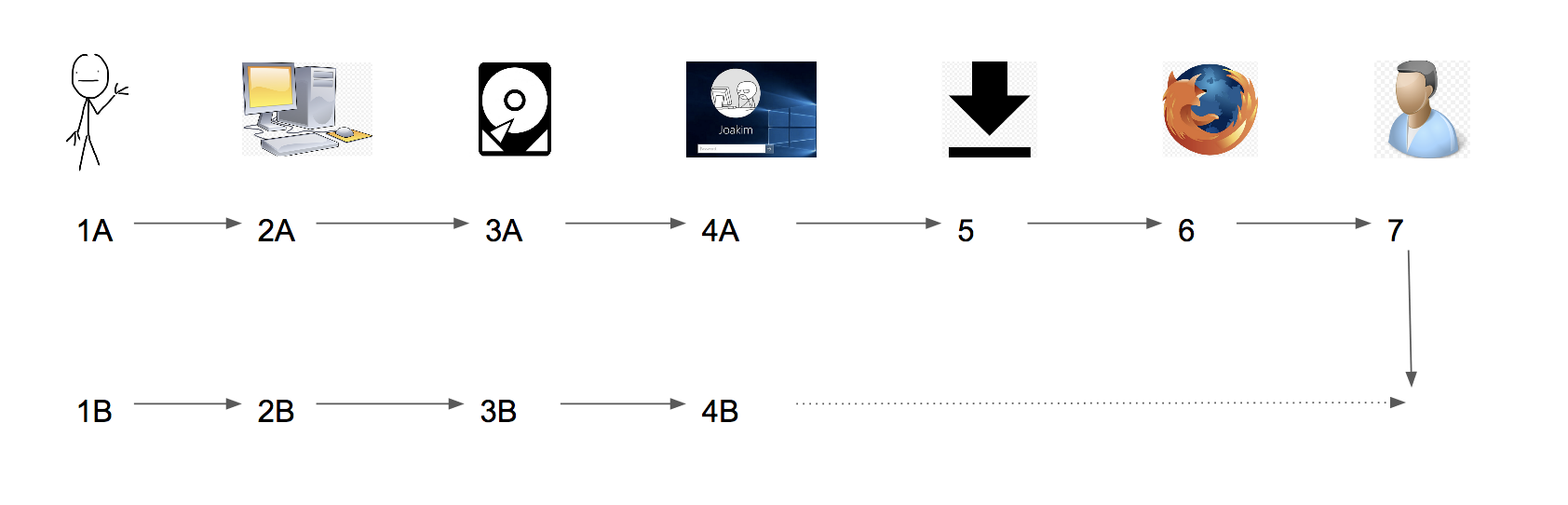

Mozilla’s data pipeline, which is used to collect Telemetry data from Mozilla’s products and logs from various services. The bulk of the data that is handled by this pipeline is Firefox Telemetry data. The same tool-chain is used to collect, store, and analyze data that comes from many sources.

For more information, see An overview of Mozilla’s Data Pipeline.

Probe

Measurements for a specific aspect of Firefox are called probes. A single telemetry ping sends many different probes. Probes are either Histograms (recording distributions of data points) or Scalars (recording a single value).

You can search for details about probes by using the Probe Dictionary. For each probe, the probe dictionary provides:

- A description of the probe

- When a probe started being collected

- Whether data from this probe is collected in the release channel

Newer measurements implemented using Glean are referred to as metrics instead of probes, but the basic outline is the same. Details about Glean Metrics are collected inside the Glean Dictionary.

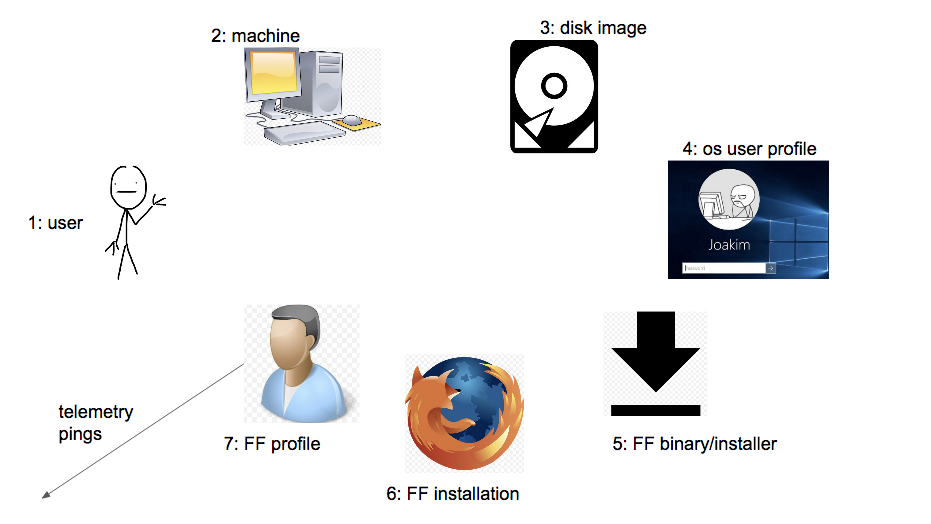

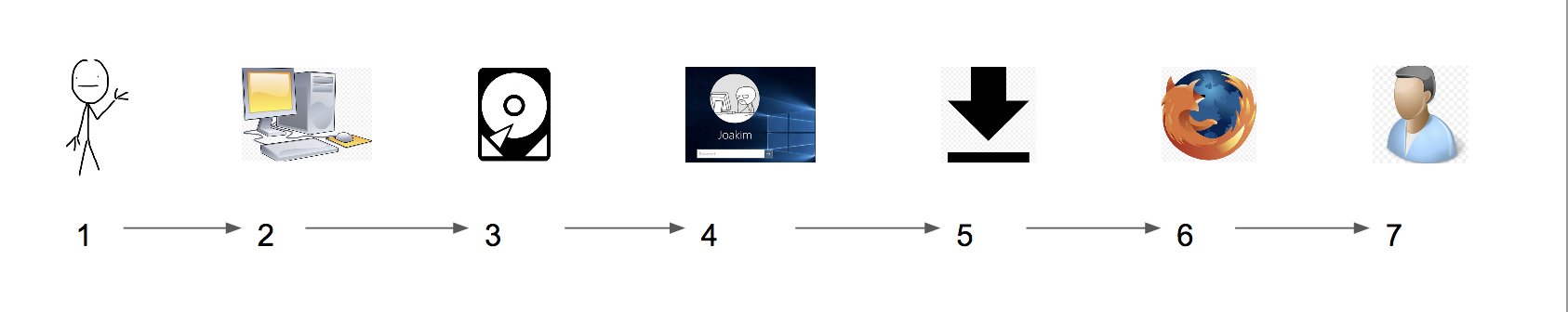

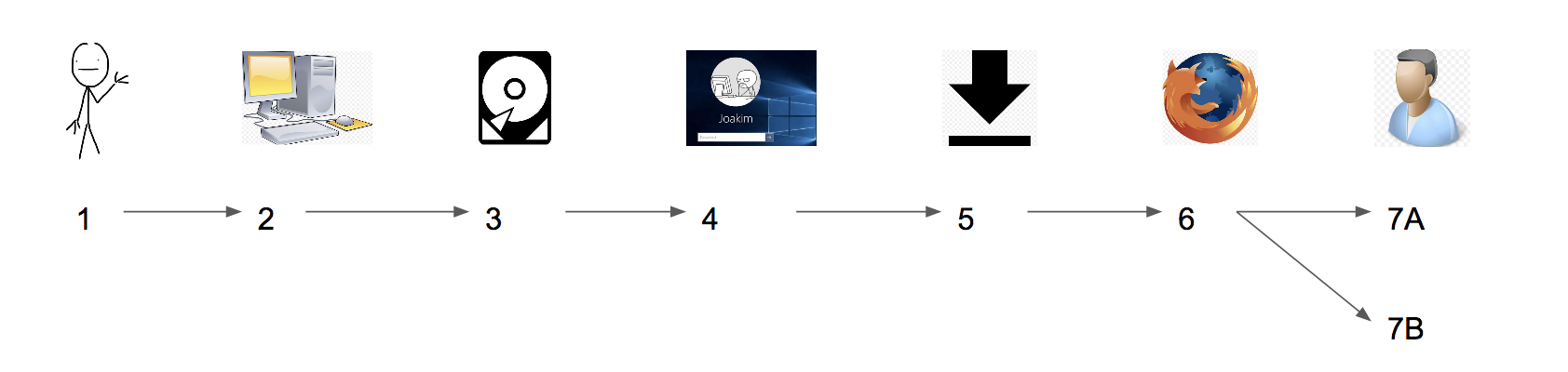

Profile

All of the changes a user makes in Firefox, like the home page, what toolbars you use, installed addons, saved passwords and your bookmarks, are all stored in a special folder, called a profile. Telemetry stores archived and pending pings in the profile directory as well as metadata like the client id. See also Profile Creation.

Query

Typically refers to a query written in the SQL syntax, run on (for example) STMO.

Retention

-

As in “Data retention” - how long data is stored before it is automatically deleted/archived?

-

As in “User retention” - how likely is a user to continue using a product?

Schema

A schema is the organization or structure for our data. We use schemas at many levels (in data ingestion and storage) to make sure the data we submit is valid and possible to be processed efficiently.

Session

The period of time that it takes between Firefox being started until it is shut down. See also subsession.

Subsession

In Firefox, Sessions are split into subsessions after every 24-hour time period has passed or the environment has changed. See here for more details.

STMO (sql.telemetry.mozilla.org)

A service for creating queries and dashboards. See STMO under analysis tools.

Telemetry

As you use Firefox, Telemetry measures and collects non-personal information, such as performance, hardware, usage and customizations. It then sends this information to Mozilla on a daily basis and we use it to improve Firefox.

URI

Uniform Resource Identifier - a string that refers to a resource. The most common are URLs, which identify the resource by giving its location on the Web (source).

URL

Uniform Resource Locator - a text string that specifies where a resource (such as a web page, image, or video) can be found on the Internet (source). For example, https://www.mozilla.org is a URL.

WAU

Weekly Active Users - The number of unique profiles active at least once during the 7-day window ending on the specified day.

Tutorials & Cookbooks

This section contains documentation describing how to perform specific tasks. It includes the following sections:

- Getting Started: How to get started.

- Analysis Cookbooks: Tutorials on analyzing data.

- Operational Cookbooks: Tutorials describing how to perform various operational tasks.

- Sending Telemetry: Tutorials on adding new Telemetry.

Getting started

This section contains some basic tutorials on how to get up and running with Mozilla's data.

Accessing Telemetry data

Public Data

Aggregated information on the Firefox user population (including hardware, operating system, and other usage characteristics) is available at the Firefox Public Data Report portal.

In addition, a set of curated datasets are available to the public for research purposes. See the public data cookbook for more information.

Non-public Data

Access to other Telemetry data is limited to two groups:

- Mozilla employees and contractors

- Contributors who have signed a non-disclosure agreement, have a sustained track record of contribution, and have a demonstrated need to access this data

If you are an employee or contractor, you should already have the necessary permissions.

If you are a contributor and want to request access to Mozilla Telemetry data, file a bug in the operations component and ask an established Mozilla contributor or employee to vouch for you.

Getting Help

Mailing lists

Telemetry-related announcements that include new datasets, outages, feature

releases, etc. are sent to fx-data-dev@mozilla.org, a public

mailing list. Follow the link for archives and information on how to subscribe.

Matrix

You can locate us in the #telemetry:mozilla.org channel on Mozilla's instance of matrix.

Slack

You can ask questions (and get answers!) in #data-help on Mozilla Internal's

Slack. See also #data for general data-related discussion.

Reporting a problem

If you see a problem with data tools, datasets, or other pieces of infrastructure, report it!

Defects in the data platform and tools are tracked in Bugzilla in the Data Platform and Tools product.

Bugs need to be filed in the closest-matching component in the Data Platform and Tools product. If you are not able to locate an appropriate component for the item in question, file an issue in the General component.

Components are triaged at least weekly by the component owner(s). For any issues that need

urgent attention, it is recommended that you use the needinfo flag to attract attention

from a specific person. If an issue does not receive the appropriate attention in a

week (or it is urgent), see getting help.

When a bug is triaged, it is assigned a priority and points. Priorities are processed as follows:

P1: in active development in the current sprintP2: planned to be worked on in the current quarterP3: planned to be worked on next quarterP4and beyond: nice to have, would accept a patch, but not actively being worked on.

Points reflect the amount of effort that is required for a bug. They are assigned as follows:

- 1 point: one day or less of effort

- 2 points: two days of effort

- 3 points: three days to a week of effort

- 5 points or more: SO MUCH EFFORT, major project.

Problems with the data

There are Bugzilla components for several core datasets, as described in this documentation. If at all possible, assign a specific component to the issue.

If there is an issue with a dataset to which you are unable to assign its own component, file an issue in the Datasets: General component.

Problems with tools

There are Bugzilla components for several of the tools that comprise the Data Platform. File a bug in the specific component that most closely matches the tool in question.

Operational issues, such as services being unavailable, need to be filed in the Data SRE Jira Project.

- The ticket should contain the following information:

- Service details

- Steps to reproduce

- Impact to users

Other issues

When in doubt, file issues in the General component.

Analysis

This section contains tutorials on how to analyze Telemetry data.

Data Analysis Tools

This section covers data tools that you can use for discovering what data is available about our products.

The Data Catalog

Reference material for data assets (tables, dashboards, pings, etc.) can primarily be found in the Data Catalog: https://mozilla.acryl.io. It provides an automatically updated "map" of data assets, including lineage and descriptions, without the need for manual curation.

What do I use it for?

The primary use case for the catalog is finding out (at a glance) which data assets exist and how they relate to one another. A few examples:

- Finding the source ping or table from a Looker dashboard.

- Finding out whether a source ping or table has any downstream dependencies.

- Getting a high-level overview of how tables are transformed before data shows up in a dashboard.

- Tracing a column through various BigQuery tables.

- Finding the source query or DAG that powers a particular BigQuery table.

How do I use it?

Navigate to https://mozilla.acryl.io and log in via SSO. Once logged in, you should be able to explore assets via the search bar or by clicking on a platform (e.g. BigQuery or Glean).

When was this implemented?

We tested a number of tools in 2022 and finally settled on Acryl. Integration work proceeded from there and continues as we add more tools and assets to our data platform.

Is the Data Catalog a replacement for tools like the Glean Dictionary or the Looker Data Dictionary?

No. While the features between the Data Catalog and tools such as the Glean Dictionary and Looker Data Dictionary overlap, the Data Catalog is meant to be less focused on any single tool and more on assets from all the tools in our data platform, providing lineage and reference material that links them together.

What software does it use?

The catalog is a managed version of open source DataHub, a metadata platform built and maintained by the company Acryl.

How is the metadata populated?

Metadata is pulled from each included platform. Depending on the source, metadata ingestion is either managed in the Acryl UI or via our custom ingestion code:

- Glean - Pings are ingested from the Glean Dictionary API. This is scheduled nightly in CircleCI. The ingestion code is located in the mozilla-datahub-ingestion repository.

- Legacy Telemetry - Pings are ingested from the Mozilla Pipeline Schemas repository.This is scheduled nightly in CircleCI. The ingestion code is located in the mozilla-datahub-ingestion repository.

- BigQuery - Views, Tables, Datasets, and Projects are ingested from the BigQuery audit log and query jobs. This is scheduled nightly in the Acryl UI. The documentation can be found on The DataHub docs page.

- Looker - Views, Explores, and Dashboards are ingested from both our LookML source repositories (e.g. spoke-default) and the Looker API. This is scheduled nightly in the Acryl UI. The documentation can be found on the DataHub docs page.

- Metric-Hub - Metrics are ingested from the metric-hub repository and loaded into the Business Glossary. This is scheduled nightly in CircleCI. The ingestion code is located in the mozilla-datahub-ingestion repository and the documentation can be found on the DataHub docs page.

Using the Glean Dictionary

The Glean Dictionary is a web-based tool that allows you to look up information on all the metrics1 defined in applications built using Glean, Mozilla's next-generation Telemetry SDK. Like Glean itself, it is built using lessons learned in the implementation of what came before (the probe dictionary in this case). In particular, the Glean Dictionary is designed to be more accessible to those without deep knowledge of instrumentation and/or data platform internals.

How to use

You can visit the Glean Dictionary at dictionary.telemetry.mozilla.org.

As its content is generated entirely from publicly available source code, there is no access control.

From the top level, you can select an application you want to view the metrics for.

After doing so, you can search for metrics by name (e.g.: addons.enabled_addons), type (e.g.: string_list), or tags (e.g. WebExtensions).

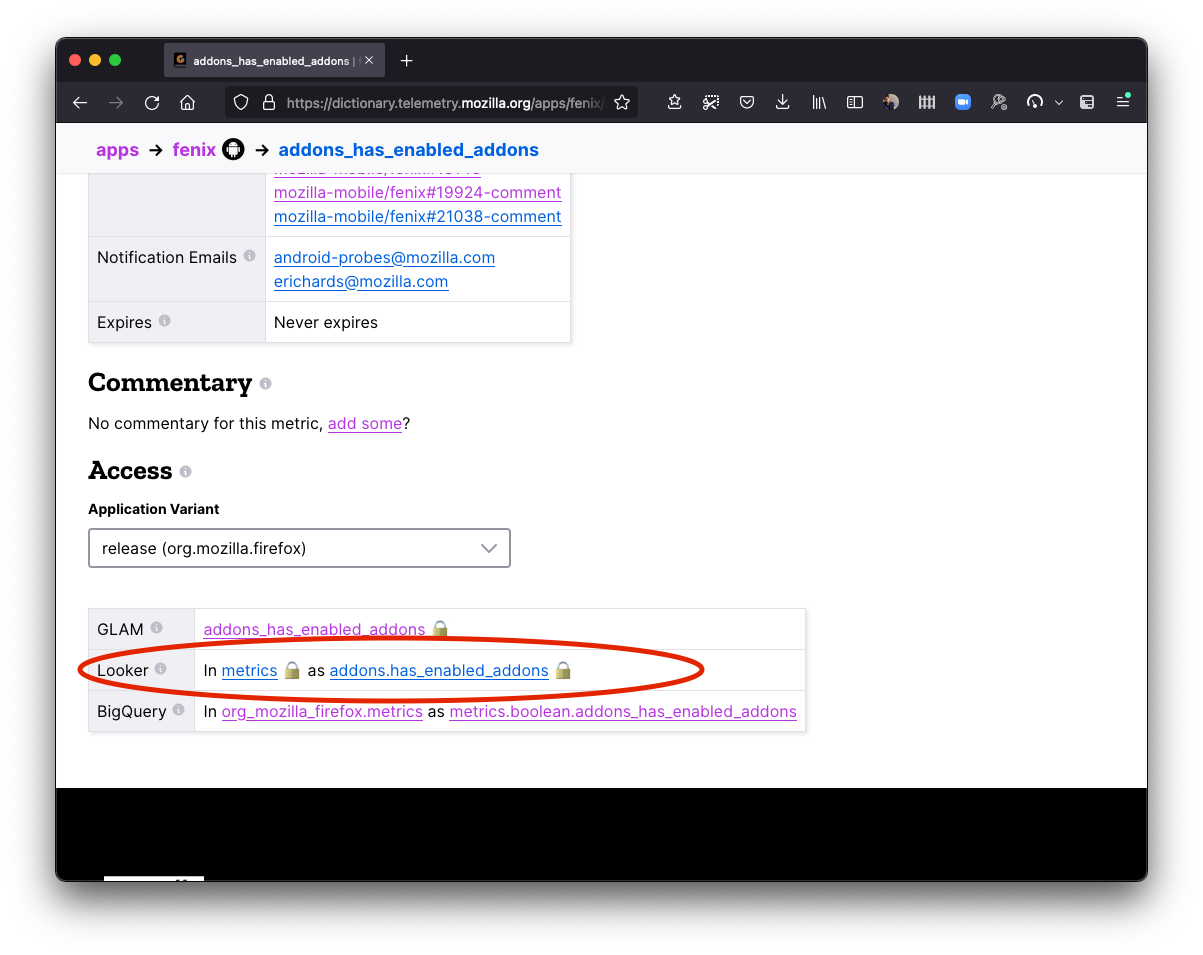

After selecting a metric, you can get more information on it including a reference to its definition in the source code as well as information on how to get the data submitted by this probe in some of our data tools like STMO, Looker, and GLAM.

Common Questions

How can I go from a metric to querying it in BigQuery?

Underneath the metric definition, look for the section marked "access". This should tell you the BigQuery table where the data for the metric is stored, along with the column name needed to access it.

For several examples of this along with a more complete explanation, see Accessing Glean Data.

Note that Glean refers to "probes" (in the old-school Firefox parlance) as "metrics".

Using the Probe Dictionary

The Probe Dictionary is a web-based tool that allows you to look up information on all the probes defined in Firefox's source code. Until Firefox on Glean is finished, the Probe Dictionary is the best way to look up what data is submitted by Firefox.

Note that the Probe Dictionary has not kept pace with many changes that have made to the Mozilla data platform in the last couple of years. However, with some knowledge of how Firefox and the data platform work, you can still quickly find the data that you need. If you have questions, don't hesitate to ask for help.

How to use

You can visit the Probe Dictionary at probes.telemetry.mozilla.org.

As its content is generated entirely from publicly available source code in Firefox, there is no access control.

From the top level, you can search for a probe by name, descriptive, or other category by entering the appropriate text in the search box.

If you click on a probe, you can get more information on it including a reference to its definition in the source code as well as information on how to get the data submitted by this probe in some of our data tools like STMO and the Telemetry Dashboard.

Common Questions

How can I tell if a probe is still active?

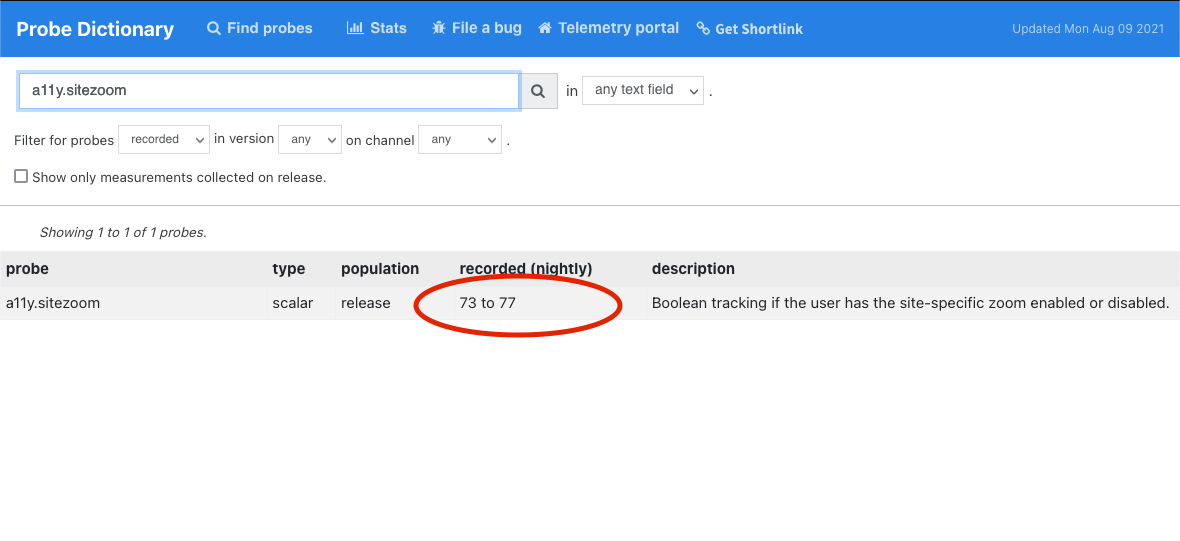

Look at the "recorded (nightly)" column after the probe definition in the summary.

If it gives a range and it ends before the current release, the probe is not active anymore.

For example, the a11y.sitezoom probe was only recorded in Nightly from Firefox 73 to 77.

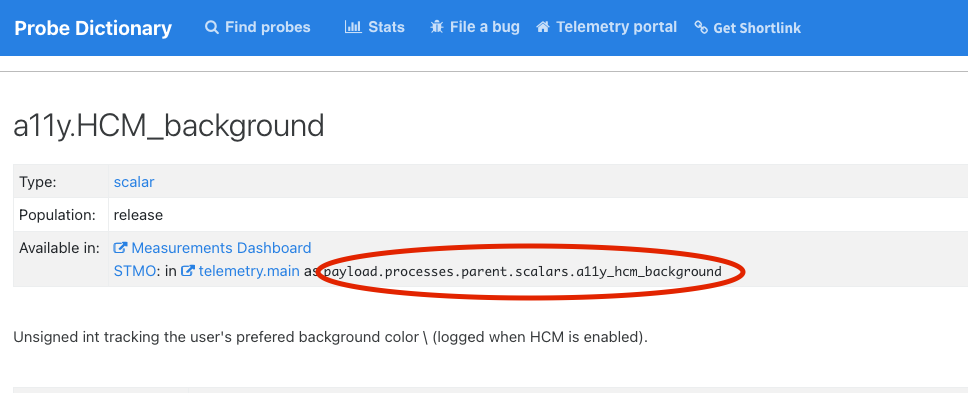

How can I go from a probe to querying it in BigQuery?

Look in the "available in" section underneath the probe.

You can use this information to query the data submitted by the probe in BigQuery using STMO or other tools.

For example, for example this query gives you the counts of the distinct values for a11y.hcm_background:

SELECT payload.processes.parent.scalars.a11y_hcm_background AS a11y_hcm_background,

count(*) AS COUNT

FROM telemetry.main_1pct

WHERE DATE(submission_timestamp)='2021-08-01' group by 1;

Other measurement types may require more complicated queries. For information on querying exponential histograms, see Visualizing Percentiles of a Main Ping Exponential Histogram.

Note that the metric's information may also appear in derived datasets, not just the raw ping tables which we are talking about above. For more information on this (and how to explore data stored in derived data sets), see Accessing Desktop Data.

For keyed scalars, how can I find out what the keys mean?

First, check the probe description: basic documentation is often there. For example, in the a11y.theme probe it says:

OS high contrast or other accessibility theme is enabled. The result is split into keys which represent the values of browser.display.document_color_use: "default", "always", or "never".

If this is not given, your best option is probably to look at the Firefox source code using Searchfox (a link to a sample query is provided by Probe Dictionary). Again, feel free to ask for help if you need it.

Introduction to Bigeye (Data Observability Platform)

Mozilla uses Bigeye as its data observability platform to ensure high data quality and reliability across its pipelines. Bigeye offers powerful features like automated anomaly detection, detailed data lineage tracking, and customizable monitoring. These capabilities allow teams to swiftly identify, diagnose, and resolve data issues, enhancing overall data integrity and operational efficiency.

Accessing Bigeye

You can access Mozilla's instance of Bigeye at app.bigeye.com. If you do not have the necessary access or permissions, please submit a Jira ticket.

Getting Started

Watch the Bigeye tutorial to get an overview of the platform. Please refer to additional helpful videos available on the Bigeye channel.

Stay Updated on What's Happening

Have an issue or looking for a new feature in Bigeye

If you're experiencing any issues while using the issues tracker for Bigeye

Bigeye Interface

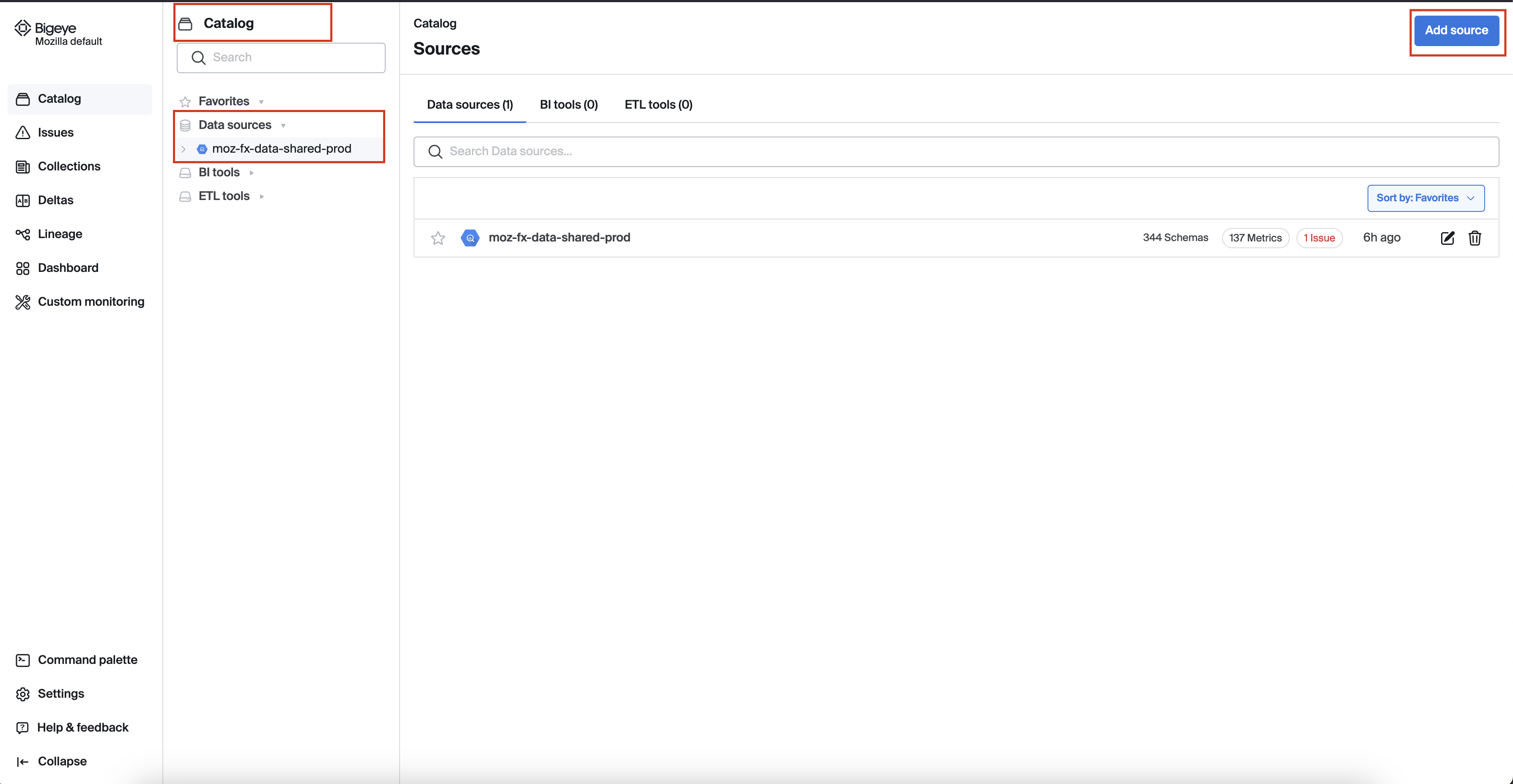

Catalog

The Catalog tab in the left-hand menu offers a comprehensive view of all data sources connected to Bigeye, making it simple to navigate and manage your entire data ecosystem.

If you are an admin, you will have access to the "Add Source" button, allowing you to easily integrate new data sources and BI tools.

The Bigeye catalog refreshes automatically every 24 hours to detect new datasets and schema changes. You can also manually refresh the catalog anytime by clicking 'Rescan' on the schema changes tab.

For more detailed information about the Catalog, please refer to the Catalog documentation page

Watch the Bigeye tutorial on how to navigate Bigeye Catalog.

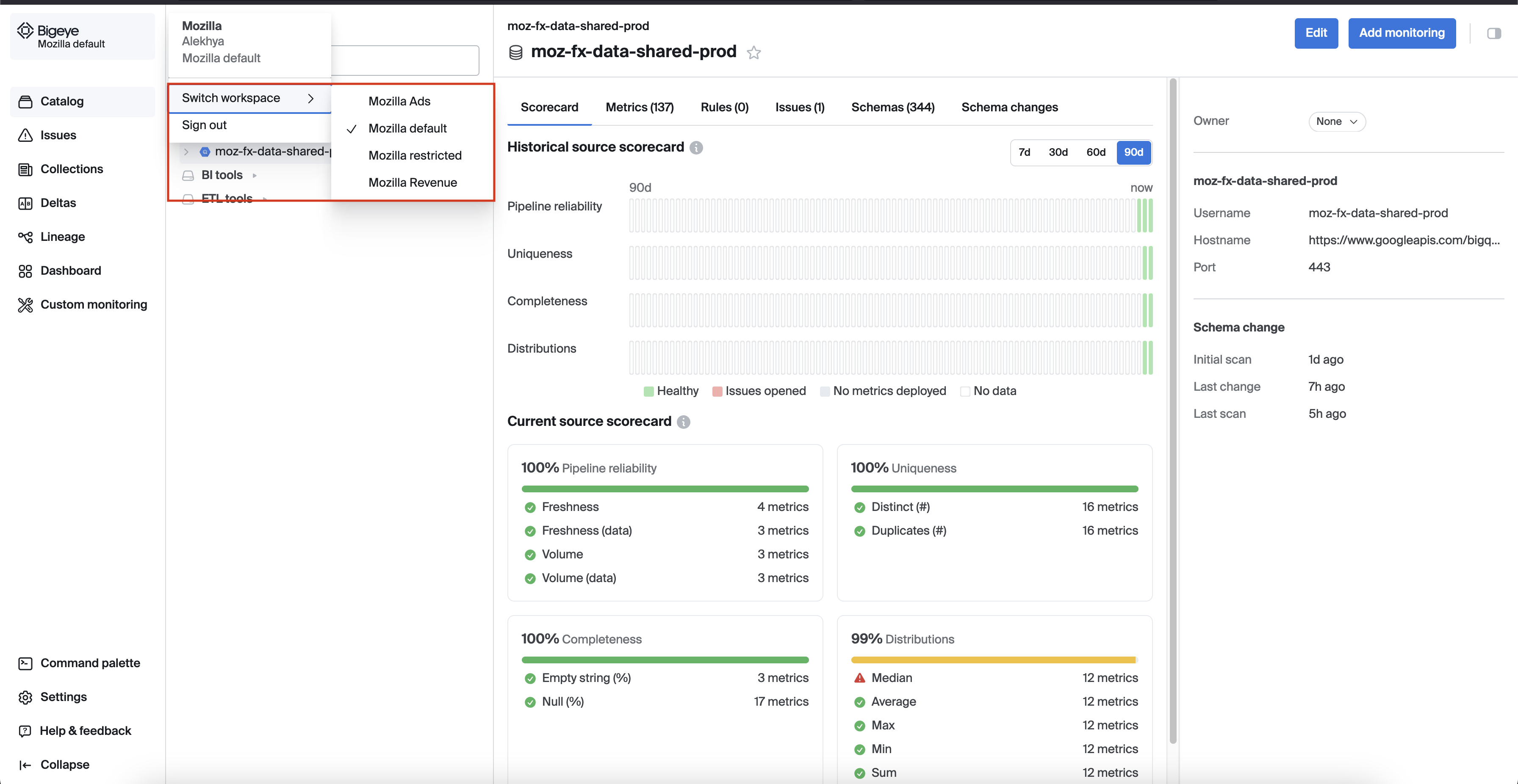

Workspaces

Workspaces in Bigeye allow multiple teams to collaborate simultaneously, with each team managing and monitoring their own data independently. Each Bigeye workspace includes its own Catalog, BI tools, and ETL tools, Metrics and issues, Templates and schedules, Collections, Deltas.

We are in the process of setting up user workspaces that will align with our existing data access restrictions.

If you do not find a suitable workspace, please submit a Jira ticket

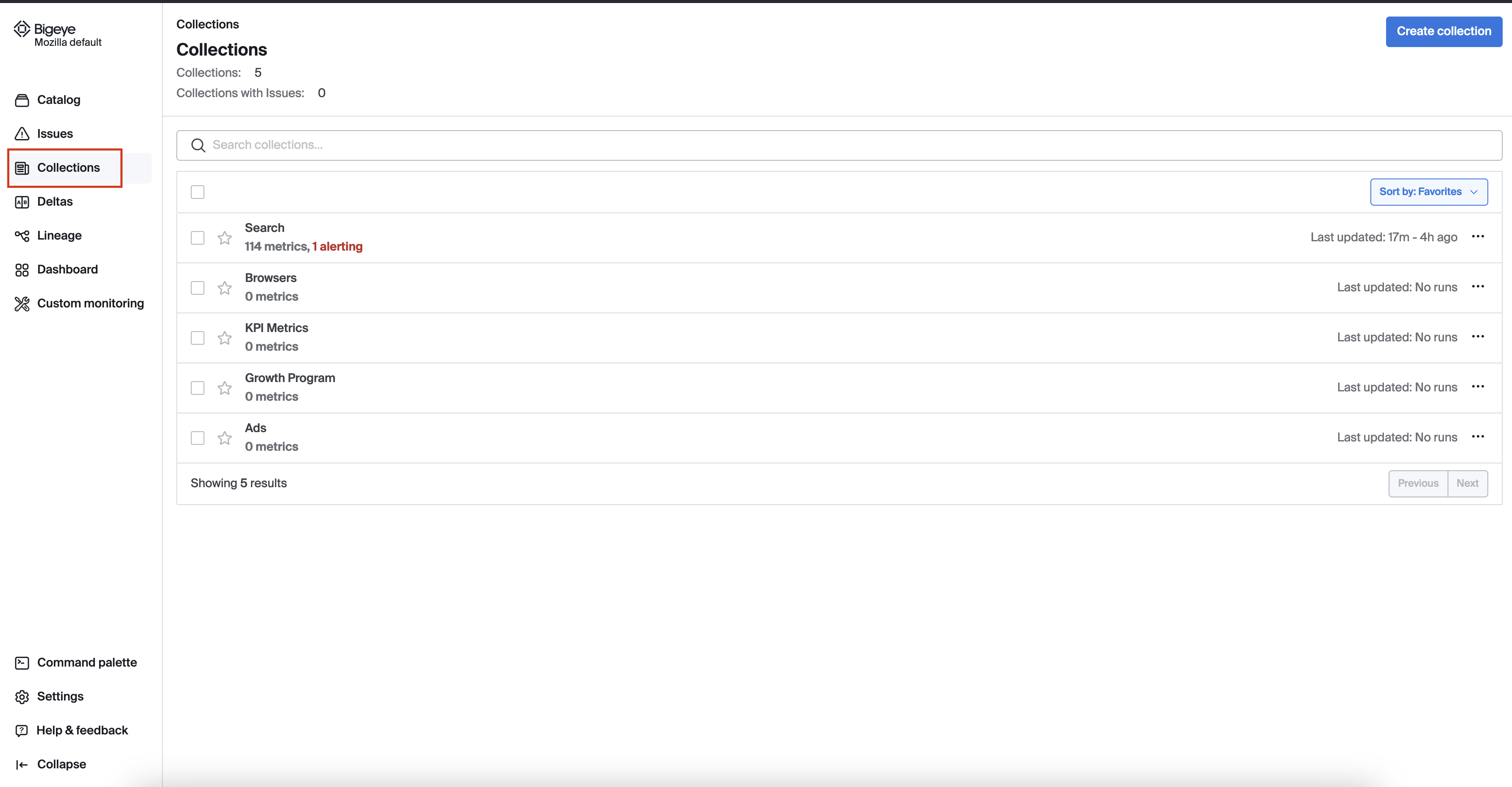

Collections

Collections in Bigeye allow you to group related metrics, making it easier to manage and monitor them together.

If you don’t find a collection that meets your product or requirements, admins can create a new collection. If you're not an admin, please submit a Jira ticket with the necessary details.

Watch the Bigeye tutorial on how to navigate Bigeye Catalog.

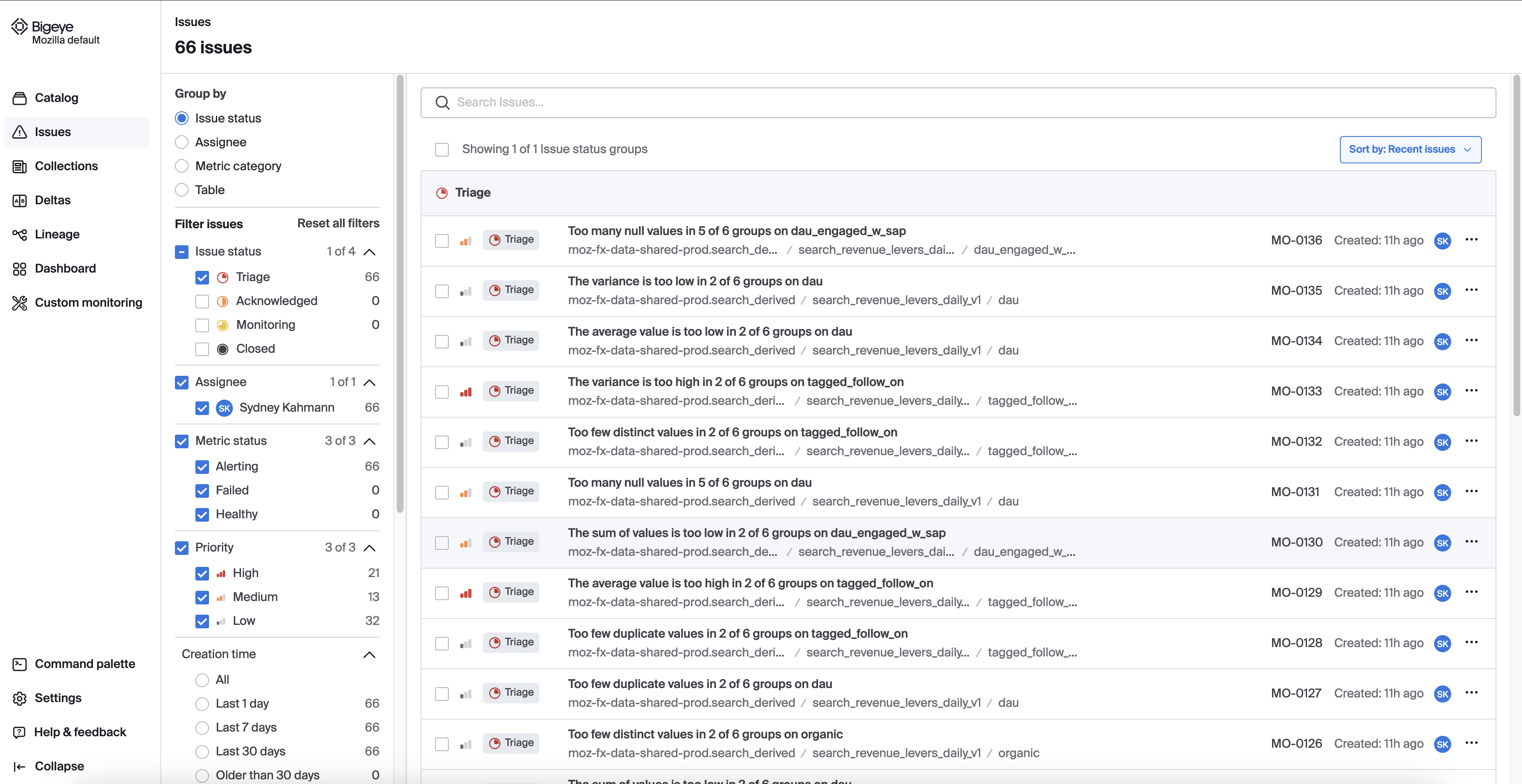

Issues

Bigeye's Issues feature helps you track and manage data quality issues detected by the platform. You can assign, prioritize, and resolve issues within the platform, ensuring that your data quality remains high. Issues can be categorized and filtered to streamline the resolution process across teams.

For more details, refer to the Bigeye documentation on Issues page

Dashboard

Users can monitor the data quality metrics and issues in a centralized view. It highlights key features such as customizable widgets, real-time metric tracking, and the ability to visualize data health at a glance. Users can configure dashboards to focus on specific metrics or tables and receive immediate insights into their data pipelines' performance.

For additional guidance on using Bigeye Dashboard, please refer to the following documentation:

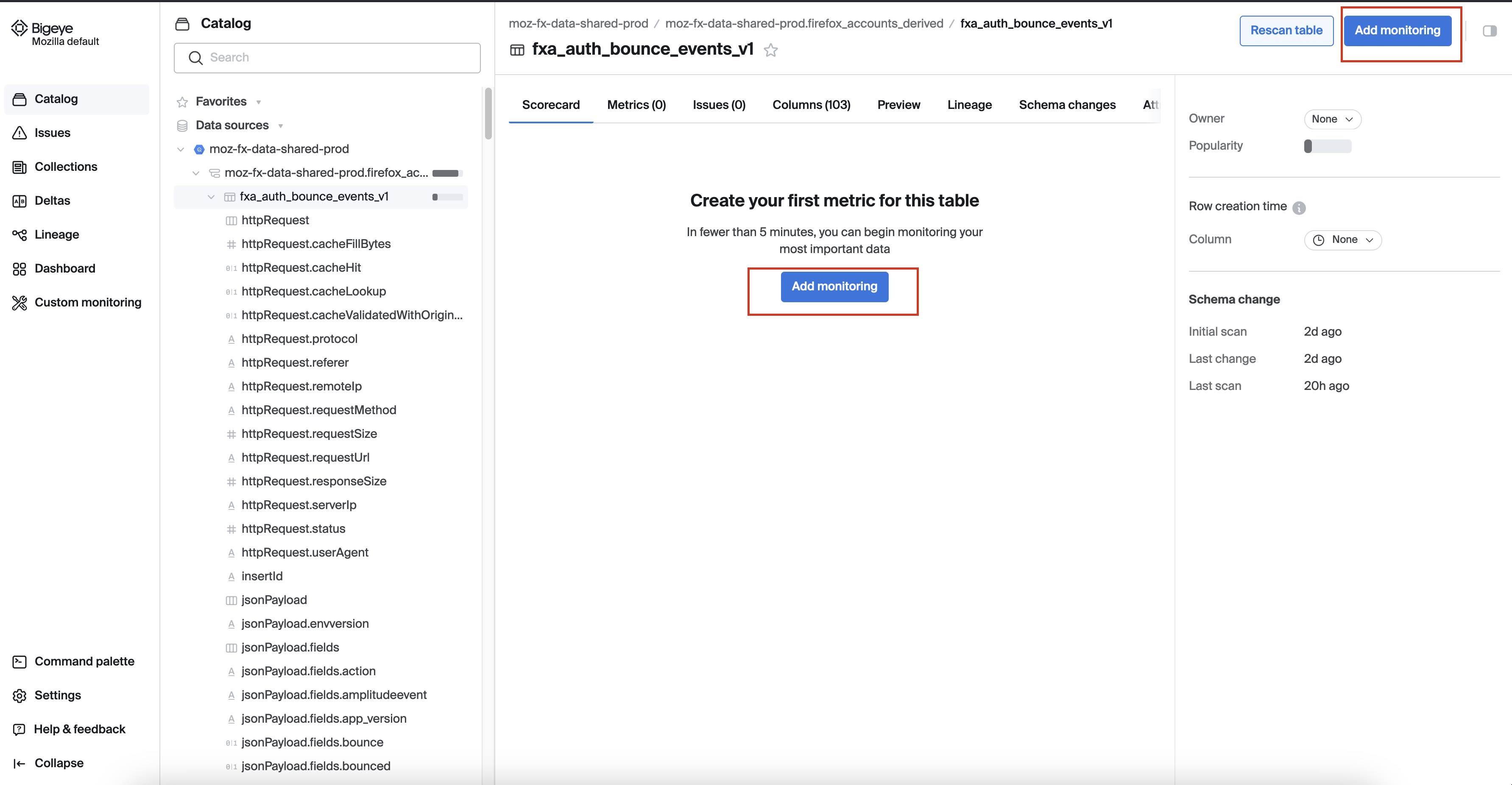

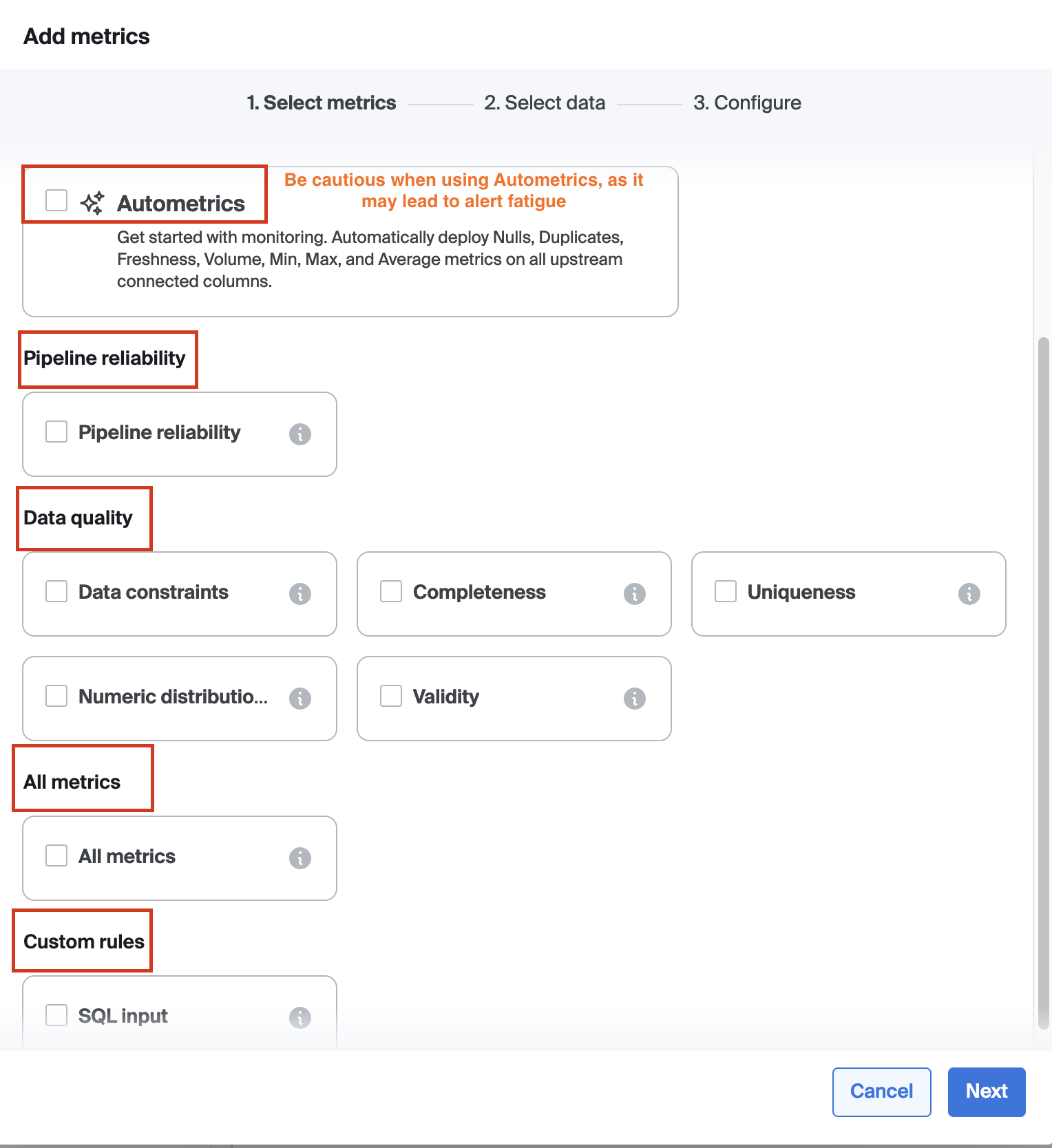

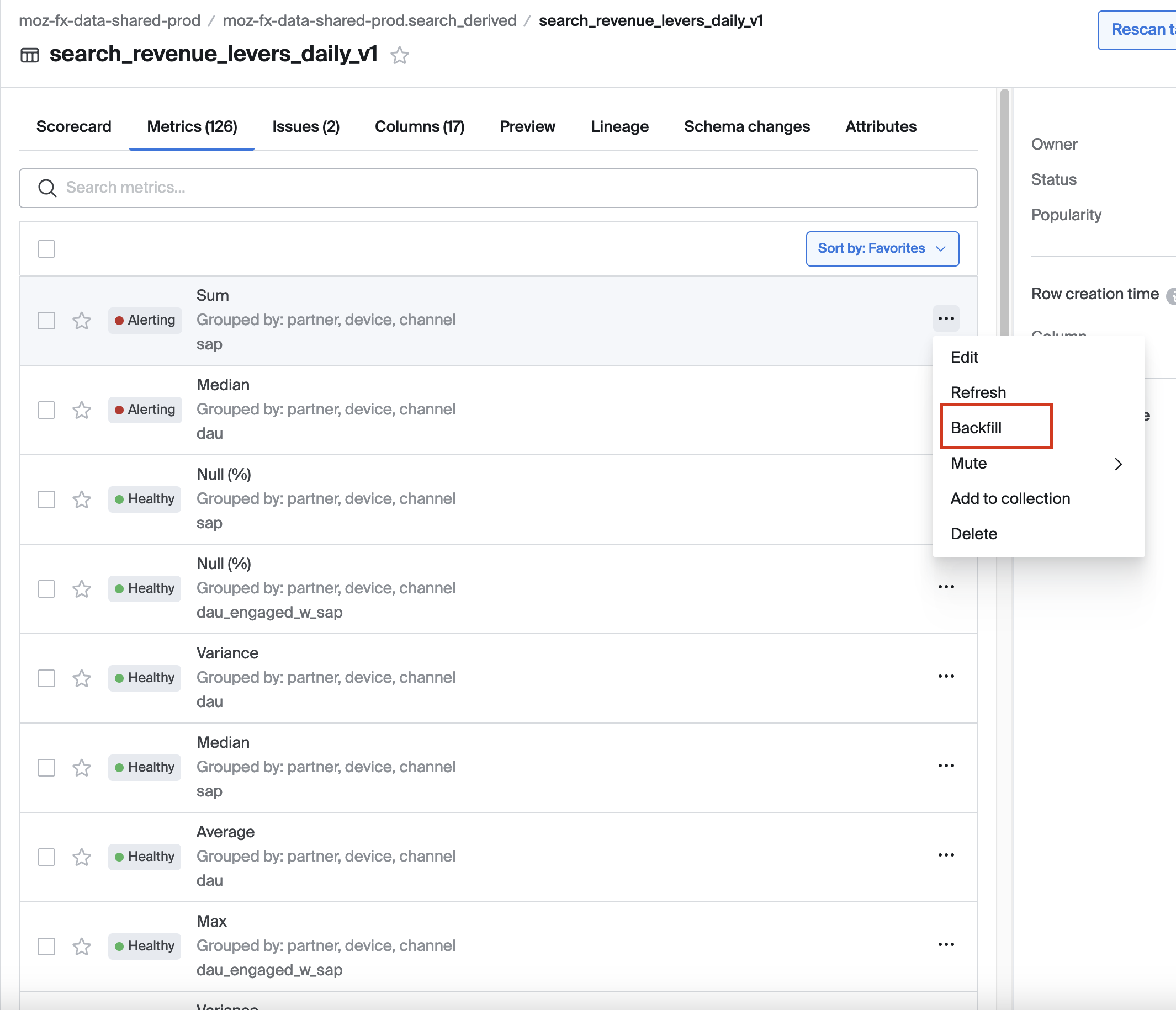

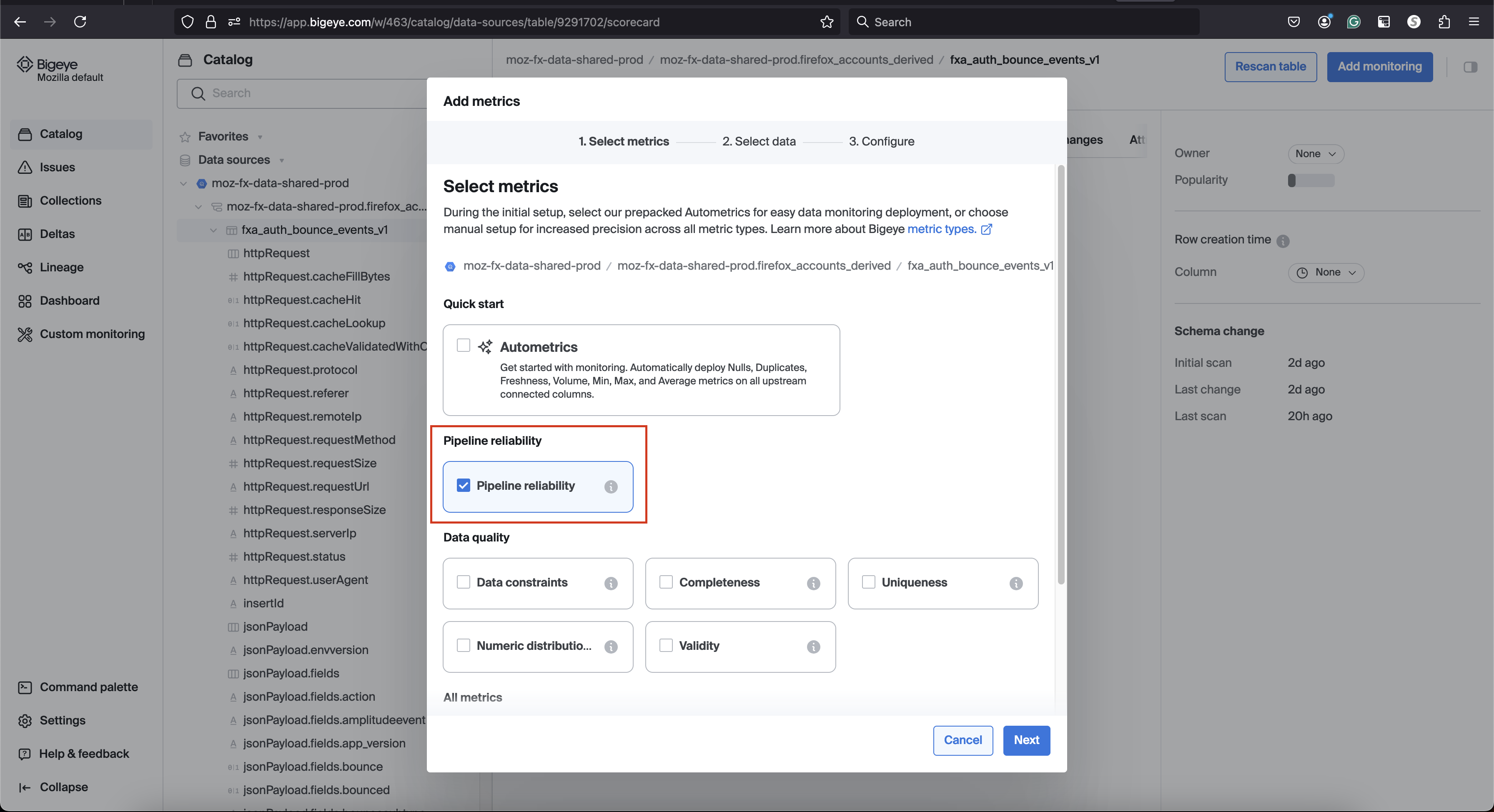

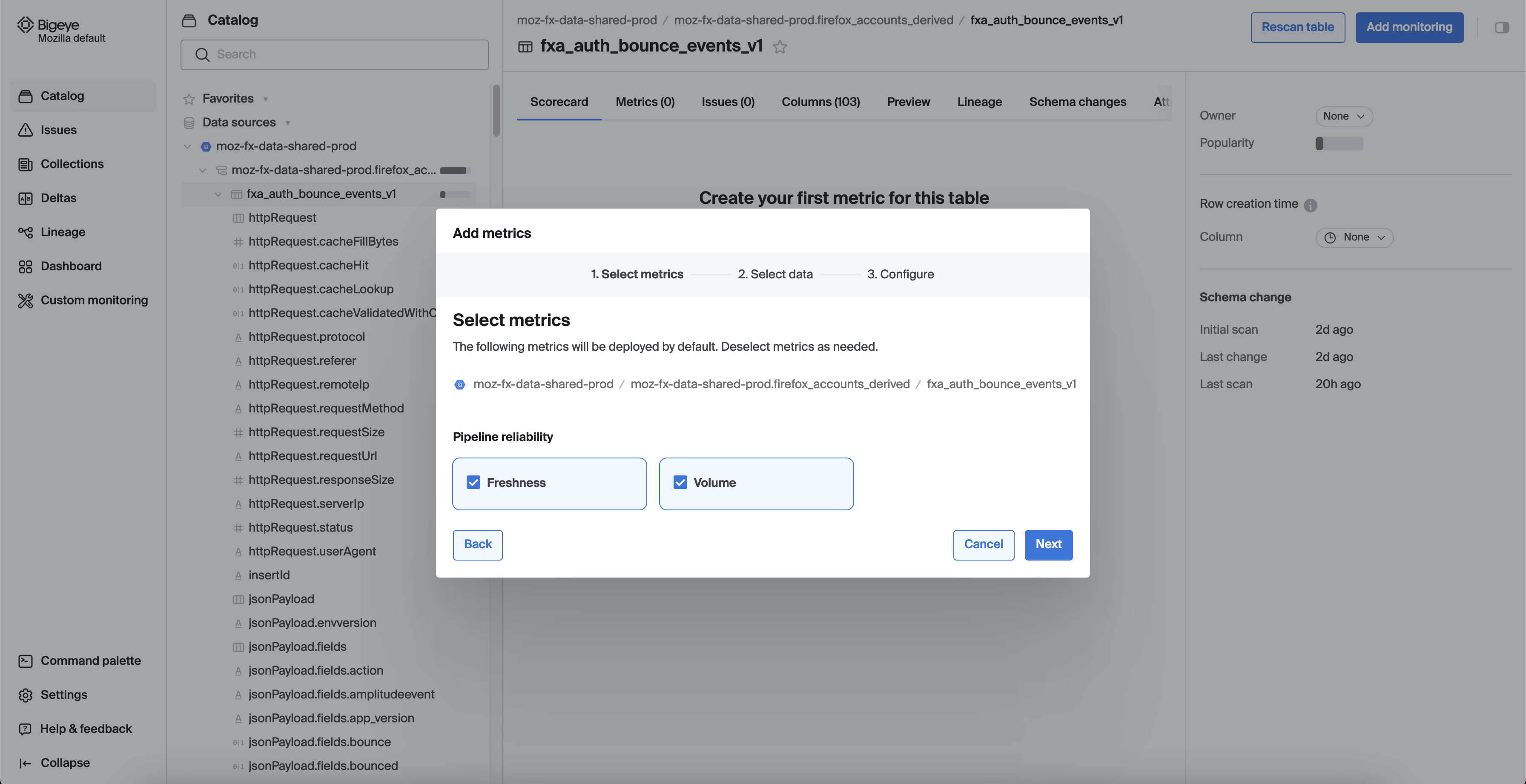

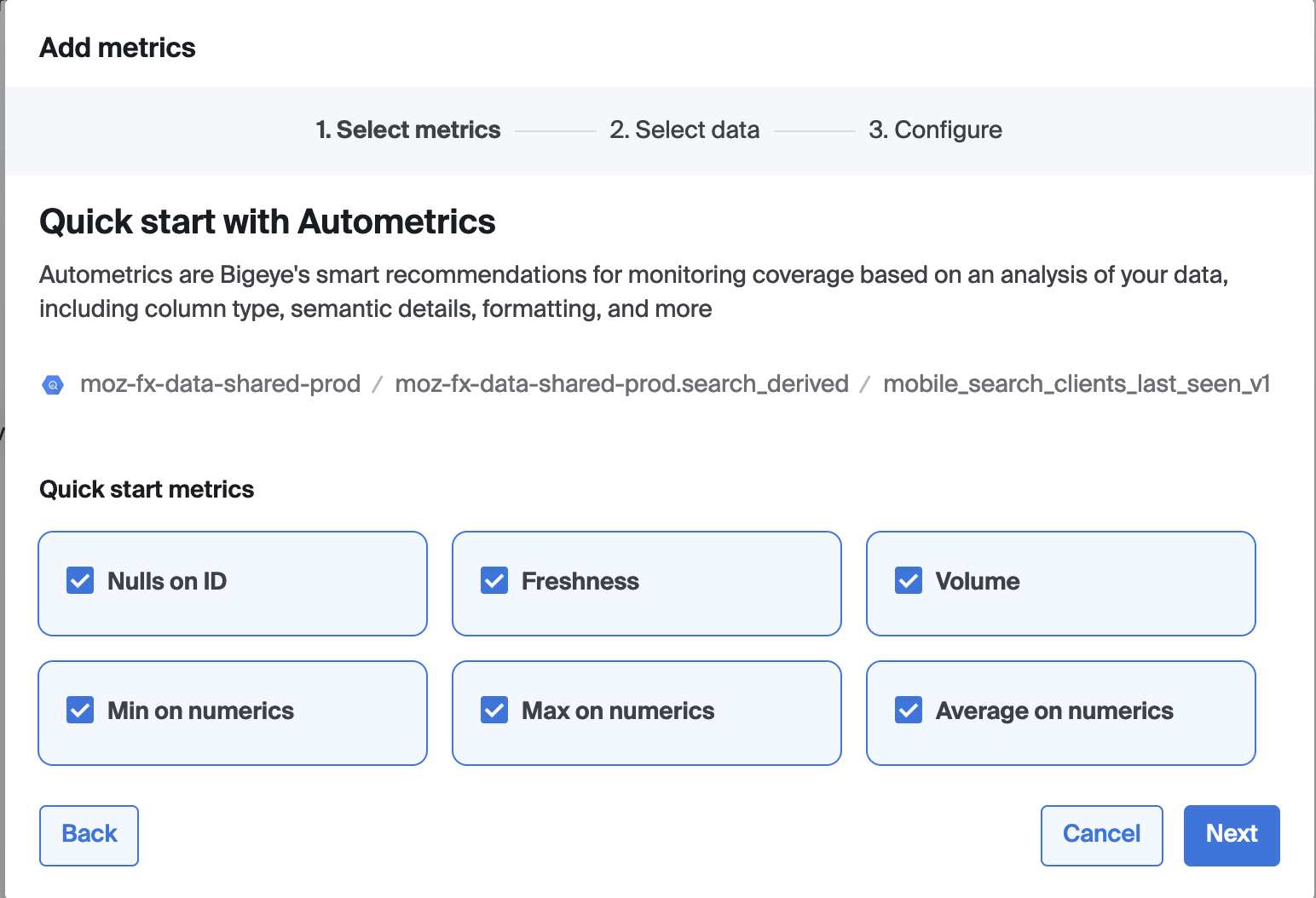

Deploying Metrics

To deploy metrics in Bigeye, navigate to a schema, table, or column and click "Add Monitoring." You can choose to add metrics via 4 options

- Freshness and Volume (Pipeline Reliability)

- Data Quality

- All metrics

- Custom SQL, and select the metrics you wish to deploy.

Next, choose the columns to monitor, set schedules, thresholds, and filters, and confirm your selections. You can assign the metrics to the relevant collection, grouping related metrics for easier management and monitoring. Once deployed, the metrics appear under the Metrics tab on the relevant schema, table, or column page. We can backfill the metrics for up to 28 days in the past.

For more details, please refer to the Bigeye documentation on how to deploy metrics.

Watch the Bigeye tutorial on how to use the metrics page

Freshness and Volume (Pipeline Reliability)

Bigeye tracks data quality by monitoring the timeliness (freshness) and completeness (volume) of your data and checks them hourly.

Initially, it looks back 28 days, then 2 days for subsequent runs. For volume, it aggregates row counts into hourly buckets, using the same lookback periods. We have an option to select manual thresholds vs Autothresholds that learn typical patterns and alert on anomalies.

Only one Freshness and one Volume metric can be deployed per table. Cost Consideration Freshness and Volume metrics are included by default for each table and are free of charge.

Please refer to Bigeye documentation for more details on Freshness and Volume metrics.

List of available metrics

Bigeye offers a range of available metrics to monitor data quality and reliability across your data pipelines. These metrics cover areas such as data freshness, volume, distribution, schema changes, and anomalies. You can deploy these metrics to track key performance indicators and ensure your data meets expected standards.

Please refer to the Bigeye documentation for list of available metrics.

Watch the Bigeye tutorial on the metrics types

Autometrics

Autometrics are suggested metrics that monitor anomalies in column-level data, automatically generated for all new datasets in Bigeye. They can be found under the Autometrics tab in the Catalog when viewing a source, schema, table, or column page.

Try to avoid this option!: On tables with many columns a large number of monitors might get deployed. This increases noise and cost. Instead, it is recommended to choose relevant metrics from the list of available metrics manually.

Custom SQL

Custom rules are useful for addressing unique data quality requirements that standard metrics may not cover. Once set, these rules integrate into your monitoring workflow.

Recommendations / Best Practices to deploying metrics

-

It's recommended to avoid deploying Autometrics extensively, as this could result in a high signal-to-noise ratio, leading to unnecessary alerts and potential distraction.

-

When deploying metrics on search tables, we observed that the

mediancalculation using the BigQuery function does not work as expected. Due to this limitation, it is recommended to avoid using the median metric in these scenarios to ensure accurate results. -

Autothresholds are recommended for freshness and volume metrics, as they automatically adjust based on typical patterns. For other metrics, it's advisable to manually set thresholds to ensure accuracy and relevance.

-

It is recommended to add metrics at the table level. This ensures that checks run as closely to the source as possible. Also, cost of running checks on tables is usually much lower in Bigeye compared to running them on views as Bigeye makes use of the partition configurations.

Collections in Bigeye

Collections help you organize and focus on specific areas of interest, making it simpler to track and address data quality across different segments of your data landscape. This feature enhances efficiency by allowing users to monitor grouped entities in a cohesive manner.

Creating a new collection

If you don’t find a collection that meets your product or requirements, admins can create a new collection.

If you're not an admin, please submit a Jira ticket with the necessary details.

Adding metrics to a collection

To add metrics to a collection in Bigeye, navigate to the collection you want to update and click "Add Metrics." You can search or filter for specific metrics that align with your monitoring goals.

Adding notifications to a collection

One useful feature of collections is the ability to add notifications. To set this up, click the "Edit" button, then navigate to the "Notifications" tab in the modal that appears.

Issues management

The Issues tab allows filtering issues by parameters like severity or date, and reviewing details such as impacted metrics and tables.

View issue details

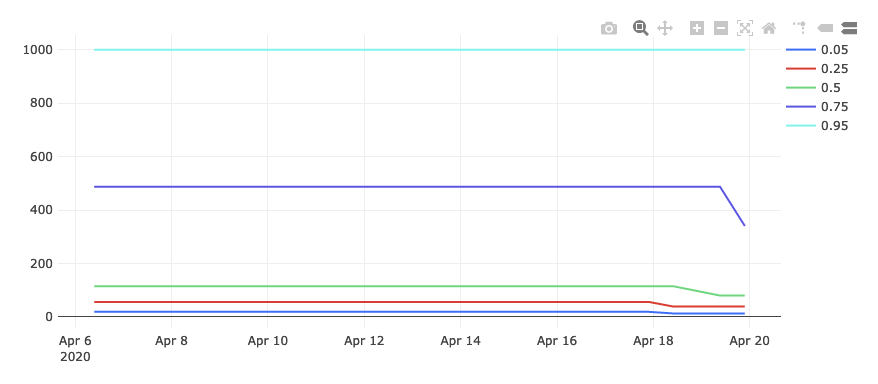

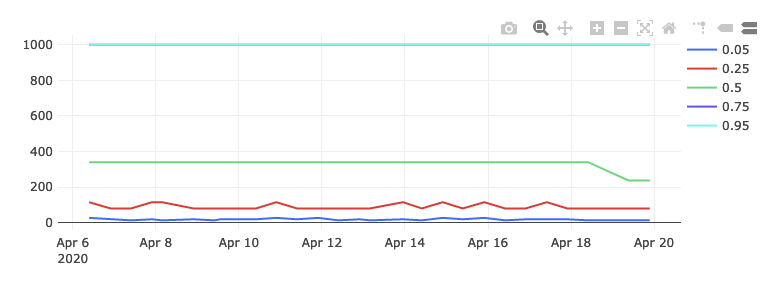

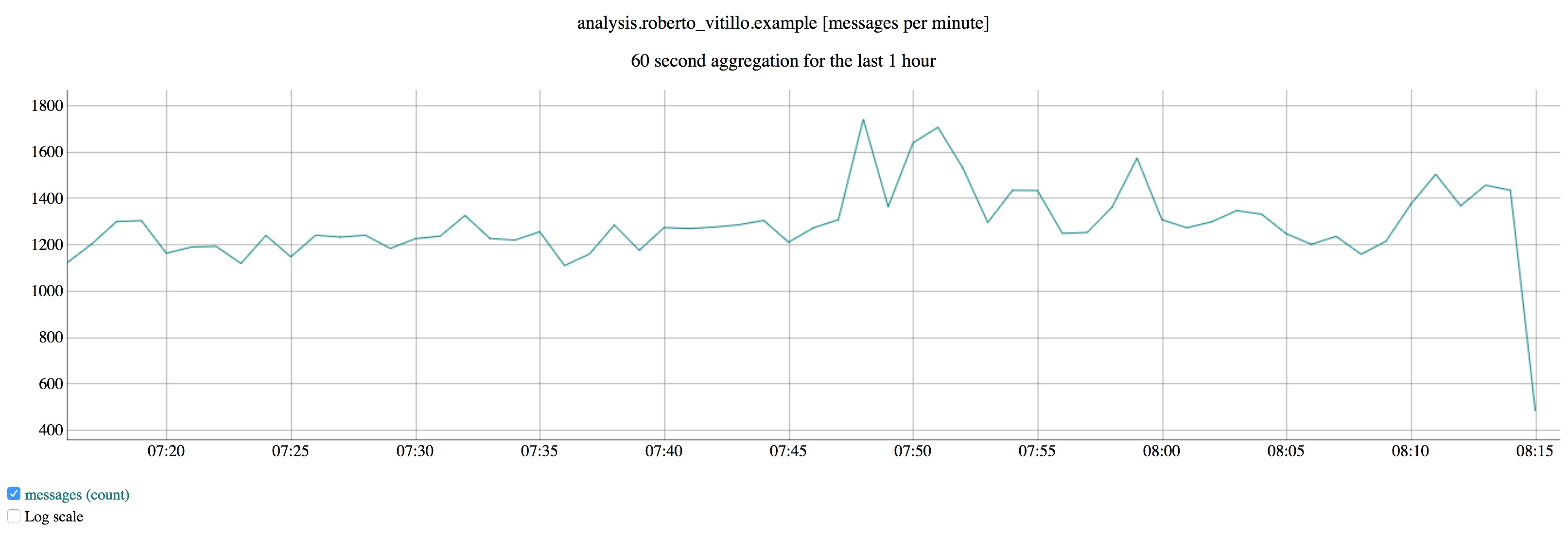

Once we click on the issue we can view a metric chart that displays a time series visualization of the alerting metric.

The metric chart in Bigeye displays a time series visualization of the alerting metric

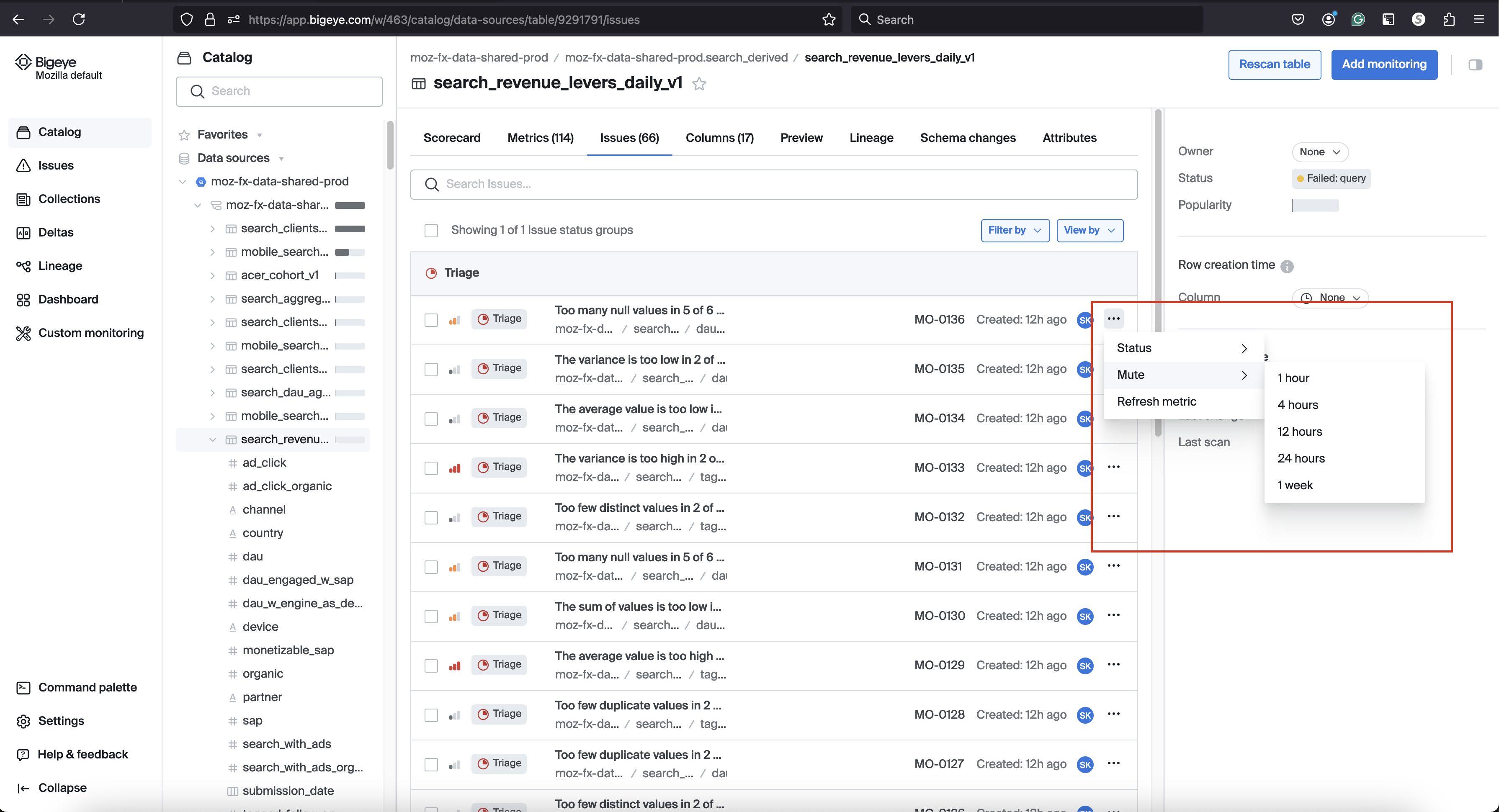

Status of Issue

Users can change the status to "Active," "Resolved," "Muted," or "Dismissed," depending on the issue's current state. This allows for better tracking and management of issues across data pipelines. Status updates are reflected in the timeline, providing a clear history of the issue's progression.

Use the Mute button above the timeline to mute an issue and stop being notified about subsequent issue alerts. The available mute options in the Bigeye are Unmute, 1 hour, 4 hours, 12 hours, 24 hours, or 1 week

For more details, refer to the Bigeye documentation.

Debug

Use the queries in the Debug tab to troubleshoot your issue.

Jira Integration

The Bigeye integration with Jira enables teams to track critical data quality issues seamlessly through Jira's flexible interface. The bi-directional integration ensures that updates made in either Jira or Bigeye are synced across both systems. Once a Jira ticket is created in Bigeye, any status changes or comments are automatically reflected in both platforms, keeping all team members informed.

Slack Integration [Coming Soon]

Bigeye enables users to take direct actions on issues on Slack messages without needing to navigate to the Bigeye web interface. Users can resolve, mute, or dismiss alerts directly from Slack messages, ensuring efficient workflows and quick responses to data quality issues.

Cost considerations

The Freshness and Volume metrics, which represent Pipeline Reliability, are included in the free tier. There is no charge when these metrics are added to a table.

However, if additional monitors are added to a table, it becomes a billable table, and charges will apply based on the number of billable tables.

Try to avoid Autometrics! - On tables with many columns a large number of monitors might get deployed. This increases noise and cost. Instead, it is recommended to choose relevant metrics from the list of available metrics manually.

bigquery-etl and Bigeye

Monitors can be defined alongside derived datasets in bigquery-etl. Monitoring in Bigeye for a specific table can be enabled by adding monitoring metadata to the metadata.yaml file:

friendly_name: Some Table [warn]

monitoring:

enabled: true # Enables monitoring for the table in Bigeye and deploys freshness and volume metrics

collection: Test # An existing collection these monitors should be part of in Bigeye

Enabling monitoring for a table automatically deploys freshness and volume metrics for this table.

Bigeye monitors are triggered automatically via Airflow for queries that have monitoring set to enabled: true. The checks are executed after the ETL run for the table has been completed.

To indicate whether a failing check should block any downstream Airflow tasks, a [warn] or [fail] can be added to the name of the Bigeye metric. By default, all metrics that do not have either of those tags specified are considered as [warn]. These metrics won't be blocking any downstream Airflow tasks when checks fail, but any failing check will appear in the Bigeye dashboard. Metrics that have [fail] specified in their names will block the execution of downstream Airflow tasks in the event of a check failing.

Bigconfig

Additional and custom monitors can be defined in a Bigconfig bigconfig.yml file that is stored in the same directory as the table query. Bigconfig allows users to deploy other pre-defined monitors, such as row counts or null checks on a table or column level.

Custom SQL Rules

This is a temporary workaround until custom SQL rules are supported in Bigconfig, which is currently being worked on.

Custom SQL rules can be configured in a separate bigeye_custom_rules.sql file alongside the query. This file can contain various rules:

-- {

-- "name": "Fenix releases version format",

-- "alert_conditions": "value",

-- "range": {

-- "min": 0,

-- "max": 1

-- },

-- "collections": ["Test"],

-- "owner": "",

-- "schedule": "Default Schedule - 13:00 UTC"

-- }

SELECT

ROUND((COUNTIF(NOT REGEXP_CONTAINS(version, r"^[0-9]+\..+$"))) / COUNT(*) * 100, 2) AS perc

FROM

`{{ project_id }}.{{ dataset_id }}.{{ table_name }}`;

-- {

-- "name": "Fenix releases product check",

-- "alert_conditions": "value",

-- "range": {

-- "min": 0,

-- "max": 1

-- },

-- "collections": ["Test"],

-- "owner": "",

-- "schedule": "Default Schedule - 13:00 UTC"

-- }

SELECT

ROUND((COUNTIF(product != "fenix")) / COUNT(*) * 100, 2) AS perc

FROM

`{{ project_id }}.{{ dataset_id }}.{{ table_name }}`;

The SQL comment before the rule SQL has to be a JSON object that contains the configuration parameters for this rule:

name: the name of the SQL rule. Specify[warn]or[fail]to indicate whether a rule failure should block downstream Airflow tasksalert_conditions: one ofvalue(alerts based on the returned value) orcount(alerts based on whether the query returns rows)collections: list of collections this rule should be a part ofowner: email address of the rule ownerschedule: optional schedule of when this rule should be triggered. The rule will also get triggered as part of Airflowrange: optional range of allowed values when"alert_conditions": "value"

Deployment

To generate a bigconfig.yml file with the default metrics when monitoring is enabled run: bqetl monitoring update [PATH].

The created file can be manually edited. For tables that do not have a bigconfig.yml checked into the repository, the file will get generated automatically before deployment to Bigeye. Files only need to be checked in if there are some customizations.

The deployment of Bigconfig files runs automatically as part of the artifact deployment process, after tables and views have been deployed.

Bigeye - Further reading

-

Kinds of alert thresholds that Bigeye supports

-

Use Deltas to compare datasets and validate successful data replication, migration, or model code changes.

-

Row Creation - Concept of row creation time and how helps save costs and optimize your data observability

-

Creating a Metric Template in order to provide specific functionality.

-

Grouped metrics allow you to employ group by aggregation.

Data Modeling guidelines

This section contains guidelines to consider when implementing new data models.

It's also a collection of best practices related to different parts of the Data Modeling process, including the review of Pull Requests and techniques for performance improvements and cost reduction.

Where to Store our analytics data models

Mozilla’s current setup allows for the implementation of data modeling and business logic in different locations e.g BigQuery or Looker. The purpose of this document is to provide guidelines to decide where to store a new asset and to review pull requests that implement them.

For the purpose of this documentation, the analytics data model is defined as the set of data assets designed to collect meaningful data from our raw datasets and structure it for an efficient understanding and analysis of our products, business processes and events.

What to store in BigQuery datasets and the bigquery-etl repository

bigquery-etl is the repository for tools and also transformations and business logic that is stored in a BigQuery dataset (derived table, aggregate table, view, materialized view).

Some examples of logic expected in bigquery-etl:

- The calculation of core metrics: DAU, WAU, MAU, new profiles.

- Calculation of search metrics. E.g. Ad clicks, search with ads, organic search.

- Calculation of acquisition, retention and churn metrics.

- Mapping from partner code to platform for Bing revenue.

- Segmentation of clients that require the implementation of business logic, not just filtering on specific columns.

What to store in Looker

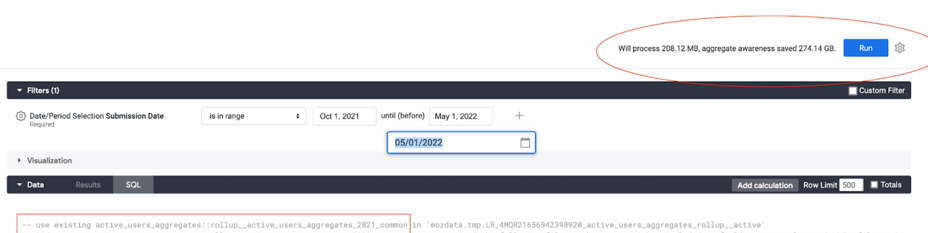

Data aggregations or aggregate awareness to improve performance, preferably that don't implement or replicate business logic.

Some examples:

- Aggregates for summarization or creating a subset from a BigQuery dataset, and that don’t include business logic. Some examples:

- A subset of data for a specific year. See this aggregate for data after 2019 in Looker.

- A subset of data with the most used dimensions. See this aggregate for specific dimensions and a time frame.

- An aggregate that covers a commonly used dashboard or view. See this aggregate to support the views that include a year over year analysis.

- Percentages, (e.g. in this view for Focus Android DAU, click through rates). These calculations are highly dependent on the dimensions and filters used and not always can be summed directly, therefore it is not recommended calculating them in BigQuery.

- Cumulative days of use. E.g. Implemented as a SUM in the Browsers KPIs view.

Using aggregates for cost saving and performance improvement

A good approach to better performance and reduced cost is reducing the amount of data scanned in queries, which can be achieved by summarizing and pre-calculating data in aggregates.

This doc is about when to use different options to aggregate data, their limitations, benefits, and examples.

- What are the options available?

- Important considerations:

- When to use each of these aggregates?

- How to measure the benefit and savings?

What are the options available?

BigQuery Aggregate Tables

Aggregates tables contain pre-aggregated results of scheduled queries running some business logic. These tables are created and maintained by the Data Team and scheduled to get updated periodically via Airflow.

BigQuery Materialized Views

These are views defined by the developer and then created, managed and incrementally updated by BigQuery, reading only the changes in the base table to compute results. Materialized view definitions do not support certain BigQuery features and expressions, such as UDFs, certain aggregate functions, backfilling or nesting. - There is a limit of 20 materialized views per table.

-

Example.

CREATE MATERIALIZED VIEW `moz-fx-data-shared-prod.monitoring_derived.suggest_click_rate_live_v1` OPTIONS (enable_refresh = TRUE, refresh_interval_minutes = 5) AS SELECT TIMESTAMP_TRUNC(submission_timestamp, minute) AS submission_minute, COUNT(*) AS n, COUNTIF(release_channel = "release") AS n_release, COUNTIF(release_channel = "beta") AS n_beta, COUNTIF(release_channel = "nightly") AS n_nightly, COUNT(request_id) AS n_merino, COUNTIF(request_id IS NOT NULL AND release_channel = "release") AS n_merino_release, COUNTIF(request_id IS NOT NULL AND release_channel = "beta") AS n_merino_beta, COUNTIF(request_id IS NOT NULL AND release_channel = "nightly") AS n_merino_nightly, FROM `moz-fx-data-shared-prod.contextual_services_live.quicksuggest_click_v1` WHERE DATE(submission_timestamp) > '2010-01-01' GROUP BY 1

Looker PDTs & aggregate awareness

These are aggregations that a developer defines in an Explore file (explore.lkml). From this definition, Looker creates a table in BigQuery's mozdata.tmp using the naming conventionscratch schema + table status code + hash value + view name and runs the scheduled update of the data.

Looker's PDTs and aggregate awareness are only referenced in Looker when at least one of the columns is used in a Looker object. These aggregates can be particularly beneficial to avoid having to rebuild dashboards after a schema change.

-

Template to create aggregate awareness in a Looker Explore, replacing the text inside <> with the actual values:

aggregate_table: <aggregate_name: Descriptive name of this aggregation.> { query: { dimensions: [<table>.<columns>] measures: [<table>.<columns>] filters: [<table>.<partition_column>: "<Period to materialize in the aggregate e.g. 2 years>"] } materialization: { sql_trigger_value: SELECT CURRENT_DATE() ;; increment_key: <table>.<partition_column> increment_offset: <INT: number of periods to update, recommended is 1.> } }

Important considerations

- Store the business logic in BigQuery, preferably in a client_id level table to aggregate from.

- All aggregates are version controlled in the git repositories

bigquery-etlandspoke-default. - All aggregates require a backfill or update when the source data changes:

- BigQuery aggregate tables are backfilled using the managed-backfill process.

- Materialized views cannot be backfilled, instead a materialized view needs to be re-created. Schema changes in base tables also invalidate the view and requires it to be re-created. Materialized views scan all historical data of their referenced base tables by default, so ensure to set a date filter to reduce the amount of data to be scanned.

- Add a date filter to materialized views to limit the amount of data to be scanned when these views get deployed initially. Otherwise, they will scan the entire data in referenced base tables.

- Looker PDTs require following the [protocol to backfill described in the Mozilla Looker Developers course.

- Indices, partition and clustering are allowed for all cases. Looker PDTs and aggregates require that these are defined in the base table.

- For Cost savings: BigQuery retries the update of materialized views after failures, which results in increased costs due to querying data multiple times.

- Monitor for broken materialized views in the BigQuery Usage Explore

- Use the command

bq cancelto stop unnecessary updates. E.g.bq --project_id moz-fx-data-shared-prod cancel moz-fx-data-shared-prod:US.<materialized view>. The permission to use this command is assigned to Data Engineering and Airflow.

When to use each of these aggregates?

A BigQuery aggregate table is suitable when:

- The query requires full flexibility to use DML, data types, aggregation functions and different types of JOIN.

- The results should not be unexpectedly affected by Shredder.

- The metric requires strict change control.

- A scheduled alert is required in case of failure or data out of sync. Airflow sends emails and alerts on failure for BigQuery aggregate tables, which are addressed daily by the Data Engineering team during the Airflow Triage.

- The table will be queried directly or used as a source for other analysis. Looker PDTs are not designed to be queried directly.

A Materialized View is suitable when:

- Your goal is to aggregate data in real-time (for example, for implementing real-time monitoring of certain metrics).

- The results can be based on shredded data (tables with client_id).

- The view will be queried directly or used as a source for other analysis.

- Change control is not required or is already implemented in the base table. This can be verified by looking for the label

change_controlled: truein the table's metadata. - A scheduled alert on failure is not required. Failures must be actively monitored in the BigQuery Usage Explore.

- The metric does not require non-deterministic functions that are not supported: RAND(), CURRENT_TIMESTAMP, CURRENT_DATE(), or CURRENT_TIME().

- The query does not require UDFs, UNNESTING arrays, COUNT DISTINCT, ORDER BY or any DML operation different from SELECT.

- The query uses a WITH clause, COUNTIF, INNER JOIN or TIMESTAMP_ADD. These are all supported.

- The data does not need to be backfilled.

- When considering materialized views, a common practice is to use a combination of an aggregate table to store historical data (e.g. older than 2 days) and use a materialized view to track data in real-time (e.g. aggregate data that is just coming in). This allows to run backfills on the aggregate table.

A Looker PDT is suitable when:

- Your goal is to improve the performance and query response in dashboards by aggregating data using common query patterns, with the added benefit of not having to re-create the dashboard every time the base table changes.

- The results can be based on shredded data (tables with client_id).

- Change control is not required or is already implemented in the base table. This can be verified by looking for the label

change_controlled: truein the metadata. - A scheduled alert on failure is not required. Failures must be monitored in the PDT Admin Dashboard or in the Errors and Broken Content Dashboard.

- The metrics defined in the Explore use only any of these aggregations: SUM, COUNT, COUNTIF, MIN, MAX or AVERAGE.

- The metrics defined in the Explore use only any of these data types: NUMBER, DATE, STRING or YESNO.

- The aggregate uses a DISTINCT COUNT and the query matches exactly the Explore query.

- The base table for the Explore is expected to change with added columns and Looker Explore will require modifications that also require re-creating the dashboards. When using aggregate awareness this re-create is not neccesary.

How to measure the benefit and savings?

-

Looker displays the amount of data that will be processed with and without using the aggregates, in the top right corner of a view or explore when in development mode.

-

BigQuery displays also in the top right corner of the window, the amount of data that will be scanned by a query written in the console.

-

Alternatively, query the information schema to return the bytes processed and cost. With this information is possible to compare and calculate the savings that result from using an aggregate instead of querying the base table directly. Using sample data for the comparison will save costs.

SELECT destination_table.project_id AS project_id, destination_table.dataset_id AS dataset, SUBSTR(destination_table.table_id, 0, INSTR(destination_table.table_id, '$') -1) AS table_id, SUM(total_bytes_processed/(1024*1024*1024)) as TB_processed, SUM((total_slot_ms * 0.06) / (60 * 60 * 1000)) AS cost FROM `moz-fx-data-shared-prod`.`region-us`.INFORMATION_SCHEMA.JOBS_BY_PROJECT WHERE EXTRACT(DATE FROM creation_time) BETWEEN <ini_date> AND CURRENT_DATE AND destination_table.dataset_id = <dataset_name> AND user_email = <user_email> AND destination_table.table_id = <table_name> GROUP BY ALL;

Shredder mitigation process

Running a backfill on aggregate tables at Mozilla comes with the high risk of altering reported metrics due to the effects of shredder. It is essential for the business to mitigate these risks while still allowing the addition and modification of columns in aggregate tables to enhance analytic capabilities or propagate changes in business definitions. At the same time, this mitigation must happen in alignment with Mozilla data protection policies by preventing aggregate tables from maintaining data that could be used to uniquely identify shredded clients.

Shredder mitigation is a process that breaks through this risk by securely executing backfills on aggregate tables. It recalculates metrics using both existing and new data, mitigating the impact of shredder and propagating business definition changes while maintaining data integrity over time.

This documentation provides step-by-step guidance with examples and common scenarios, to help you achieve consistent results.

For context and details on previous analysis and discussions that lead to this solution, refer to the proposal.

- When to use this process

- How this process transforms your workflow

- What to expect after running a backfill with shredder mitigation

- Key considerations before using shredder mitigation for the first time

- Running a managed backfill with shredder mitigation

- Troubleshooting scenarios and guidelines

- Examples for First-Time and subsequent runs

- Data validation

- FAQ

When to use this process

Shredder mitigation should be used to backfill aggregate tables when business requirements call for metrics to remain consistent and unchanged over time.

Some examples of aggregates where this process is applicable are:

- KPI and OKR aggregates.

- Search aggregates.

Use cases

- Add a new dimension or metric to an aggregate.

- Propagate upstream changes to an existing dimension or metric in the aggregate.

How this process transforms your workflow

- With this process, you can now safely backfill aggregate tables, which previously carried high risks of affecting KPIs, metrics or forecasting.

Now, it's straightforward: Create a managed backfill with the

--shredder-mitigationparameter, and you're set! - The process automatically generates a query that mitigates the effect of shredder and which is automatically used for that specific backfill.

- Clearly identifies which aggregate tables are set up to use shredder mitigation.

- The process automatically generates and runs data checks to validate after each partition backfilled that all rows match both versions. It will terminate in case of mismatches to avoid unnecessary costs.

- Prevents an accidental backfill with mitigation on tables that are not set up for the process.

- Supports the most common data types used in aggregates.

- Provides a comprehensive set of informative and debugging messages, especially useful during first-time runs where many columns may need updating.

- Each process run is documented, along with its purpose.

What to expect after running a backfill with shredder mitigation

- A new version of the aggregate table is available, incorporating all new added or modified columns.

- Totals for each column remain stable.

- Subtotals are adjusted for modified or newly added columns, with

NULLvalues increasing by the amount corresponding to the shredded client IDs.

Key considerations before using shredder mitigation for the first time

-

This process ensures it is triggered only for tables set up for this type of backfill, which you can accomplish by ensuring that:

GROUP BYis explicit in all versions of the query, avoiding expressions likeGROUP BY ALL,GROUP BY 1, 2.- All columns have descriptions that if applicable, include the date when new columns become available.

- The query and schema don't include id-level columns.

- The table metadata includes the label

shredder_mitigation: true.

-

Metrics totals will only match if all columns with upstream changes are processed in addition to your own modifications, which can be achieved by renaming any column with upstream or local changes yet to be propagated, both in query and schema.

-

Metrics totals for

NULLdata will only match if the same logic forNULLis applied in all versions of the query. Particularly,countryandcityare columns whereNULLvalues are usually set to'??'. -

It is recommended to always run the process on a

small period of timeor a subset of data to identify potential mismatches and required changes before executing a complete backfill.

Columns with recent changes that may require propagation to aggregates

first_seen_dateandfirst_seen_yearwhere the business logic for Firefox Desktop changed in 2023-Q4 to integrate data from 3 different pings.segmentwhere the business logic for all browsers changed in 2024-H1 to integrate the DAU definition to the segmentation of clients.os_versionwhere the logic now integrates thebuild_versionfor Windows operating systems.dau,wauandmauwhere the business logic changed in 2024-H1 with new qualifiers.

Running a managed backfill with shredder mitigation

The following steps outline how to use the shredder mitigation process:

- Bump the version of the aggregate query.

- Make the necessary updates to the new version of the query and schema:

- Include new columns in the query with their corresponding description in the schema and the date when they become available, if relevant.

- Update existing columns and rename them in the file to ensure they are also recalculated. If you need to avoid changes in Looker, set the expected column name in the view.

- Use a managed backfill for the new query version, with the

--shredder_mitigationparameter.

That's all! All steps are complete.

Troubleshooting scenarios and guidelines

This section describes scenarios where mismatches in the metrics between versions could occur and the recommended approach to resolution.

-

Dimensions with upstream changes that haven't propagated to your aggregate and show metrics mismatches between versions after the backfill. Follow these steps to resolve the issue

- Run a backfill with shredder mitigation for a small period of time.

- Validate the totals for each dimension and identify any columns with mismatches. Investigate whether the column had upstream changes and if so, rename it to ensure it is recalculated in your aggregate. Refer to the list of known columns with recent changes above for guidance.

- Check the distribution of values in columns with mismatches and identify any wildcards used for NULL values. Then apply the same logic in the new query.

-

The process halts and returns a detailed failure message that you can resolve by providing the missing information or correcting the issue:

- Either the previous or the new version of the query is missing.

- The metadata of the aggregate to backfill does not contain the

shredder_mitigation: truelabel. - The previous or new version of the query contains a

GROUP BYthat is invalid for this process, such asGROUP BY 1, 2orGROUP BY ALL. - The schema is missing, is not updated to reflect the correct column names and data types, or contains columns without descriptions.

-

The sql-generated queries are not yet supported in managed backfills, so run

bqetl query generate <name>in advance for this case.

Examples for First-Time and subsequent runs

This section contains examples both for first-time and subsequent runs of a backfill with shredder mitigation.

Let's use as an example a requirement to modify firefox_desktop_derived.active_users_aggregates_v3 to:

- Update the definition of existing column

os_version. - Remove column

attributed. - Backfill data.

After running the backfill, we expect the distribution of os_version to change with an increase in NULL values. Additionally, column attributed will no longer be present, and the totals for each column will remain unchanged.

Example 1. Regular runs

This example applies when the active_users_aggregates_v3 already has the label shredder_mitigation and upstream changes propagated, which makes the process as simple as:

- Bump the version of the table to

active_users_aggregates_v4to implement changes in these files. - Remove column

attributedand update the definition ofos_versionin both query and schema. Renameos_versiontoos_version_buildto ensure that it is recalculated. The schema descriptions remain valid. - Follow the managed backfill process using the

--shredder_mitigationparameter.

And it's done!

Example 2. First-Time run

This example is for a first-run of the process, which requires setting up active_users_aggregates_v3 and further versions appropriately, also propagating upstream changes.

Customize this example to your own requirements.

Initial analysis

The aggregate table active_users_aggregates_v3 contains Mozilla reported KPI metrics for Firefox Desktop, which makes it a suitable candidate to use the shredder mitigation process.

Since this is the first time the process runs for this table, we will follow the Considerations before using shredder mitigation for the first time above.

Preparation for the backfill

In preparation for the process, the first step is to get the table active_users_aggregates_v3 ready for this type of backfill. Subsequent versions that will use shredder mitigation should also follow these standards, in our case active_users_aggregates_v4.

We need these changes:

- Replace

GROUP BY ALLwith the explicit list of columns in both versions of the query. - Replace the definition of

citytoIFNULL(city, '??') AS cityfor consistent logic in query versions and data. - Add descriptions to all columns in the schema.

- Include the label

shredder-mitigation: truein the metadata of the table. - Merge a Pull Request to apply these changes.

Changes related to the requirement

- Bump the version of the table to

active_users_aggregates_v4and make changes to the new version files. - Since

os_versionis already present in the table, we need to update its definition and also rename it toos_version_buildboth in query and schema to ensure that it is recalculated. The schema description remains valid. - Remove column

attributedfrom the query and schema.

Changes to update columns with upstream changes yet to be propagated:

- Existing column

first_seen_yearis renamed tofirst_seen_year_newandsegmentis renamed tosegment_dau, as both have upstream changes. - Merge a PR to apply all changes.

Running the backfill:

- Follow the managed backfill process using the

--shredder_mitigationparameter.

Data validation

Automated validations

The process automatically generates data checks using SELECT EXCEPT DISTINCT to identify:

- Rows in the previous version of the data that are missing in the newly backfilled version which either have mismatches in metrics or are missing completely.

- Rows in the backfilled version that are not present in the previous data which either have mismatches in metrics or have been incorrectly added by the process.

The command 'EXCEPT DISTINCT' performs a 1:1 comparison by checking both dimensions and metrics which ensures a complete match of rows between both versions.

These data checks run after each partition backfilled and the process will terminate in case of mismatches to avoid unnecessary costs.

Recommended validations

Before completing the backfill, it is recommended to validate the following, along with any other specific validations that you may require:

- Metrics totals per dimension match those in the previous version of the table.

- Metric sub-totals for the new or modified columns match the upstream table. Remaining subtotals are reflected under NULL for each column.

- All metrics remain stable and consistent.

The auto-generated checks are written to the query folder. Use them to retrieve all rows when there are mismatches.

When comparing subtotals per column, ensure you use COALESCE for an accurate comparison of NULL values, and verify that all values match the upstream sources, except for NULL which is expected to increase.

FAQ

-

Can I run this process to update one column at a time and still achieve the same result?

Yes, the process allows you to add one column at a time, as long as all columns with upstream changes have been properly propagated to your aggregate table. However, this is not the recommended practice as it will lead to multiple table versions and reprocessing data several times, increasing costs. On the plus side, it may facilitate rollback of single changes if needed, so use your best criteria.

-

After following the guidelines I still have a mismatch. Where can I get for help?

If you need assistance, have suggestions to improve this documentation, or would like to share any recommendations, feel free to reach out to the Analytics Engineering team or post a message in #data-help!

In 2020, Mozilla chose Looker as its primary tool for analyzing data. It allows data exploration and visualization by experts and non-experts alike.

This section provides an introduction to Looker as well as some tutorials on how to use it.

Introduction to Looker

In 2020, Mozilla chose Looker as its primary tool for analyzing data. It allows data exploration and visualization by experts and non-experts alike.

Access to Looker is currently limited to Mozilla employees and designated contributors. For more information, see gaining access.

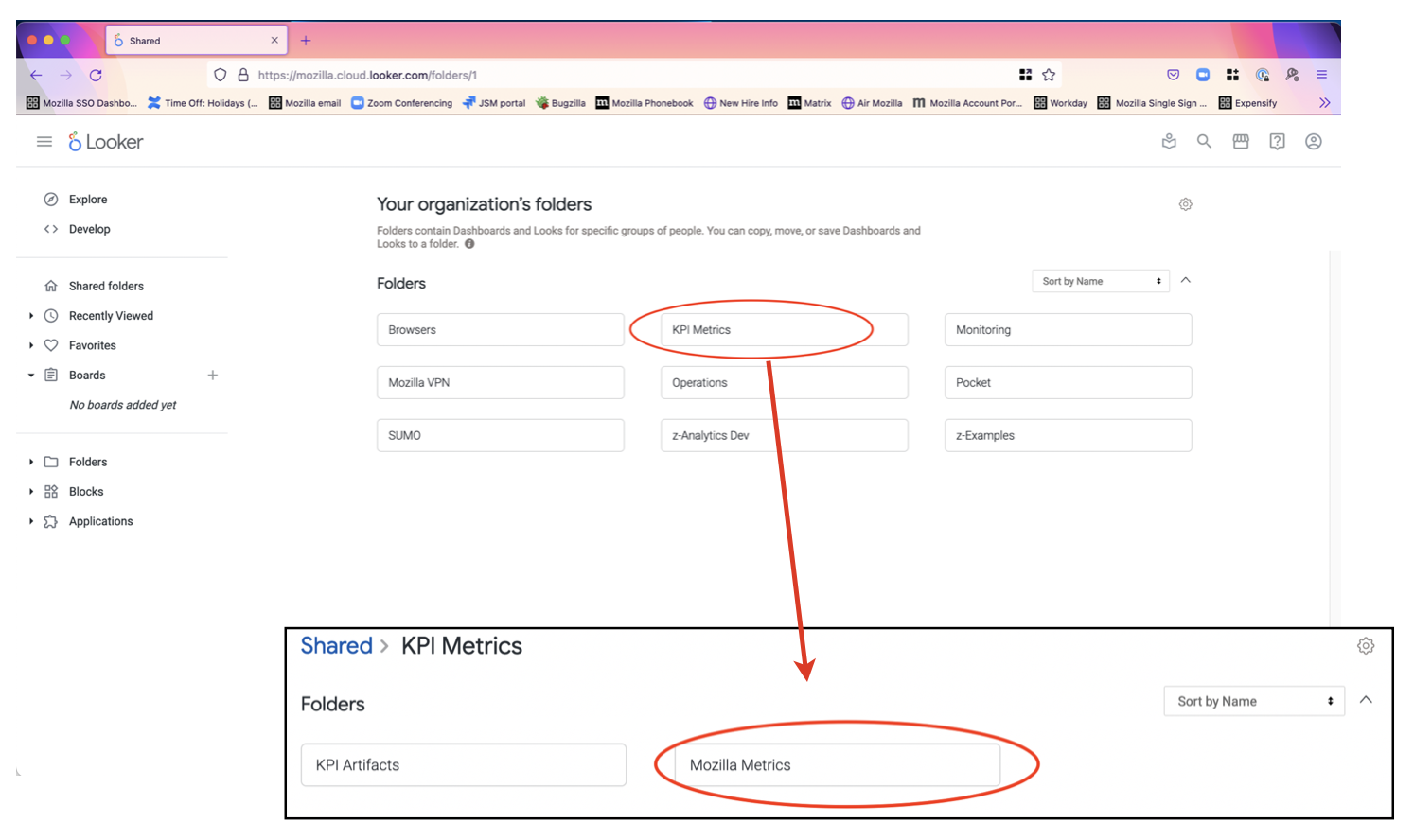

Table of Contents

Accessing Looker

You can access Mozilla's instance of Looker at mozilla.cloud.looker.com.

Getting Started

Front page

By default, on the front page you will see a list of default folders, which contain links to dashboards. These are organized by project. Of particular note is the KPI Metrics Folder, which includes several Data-produced and vetted dashboards like the Firefox Corporate KPI Dashboard.

Explores

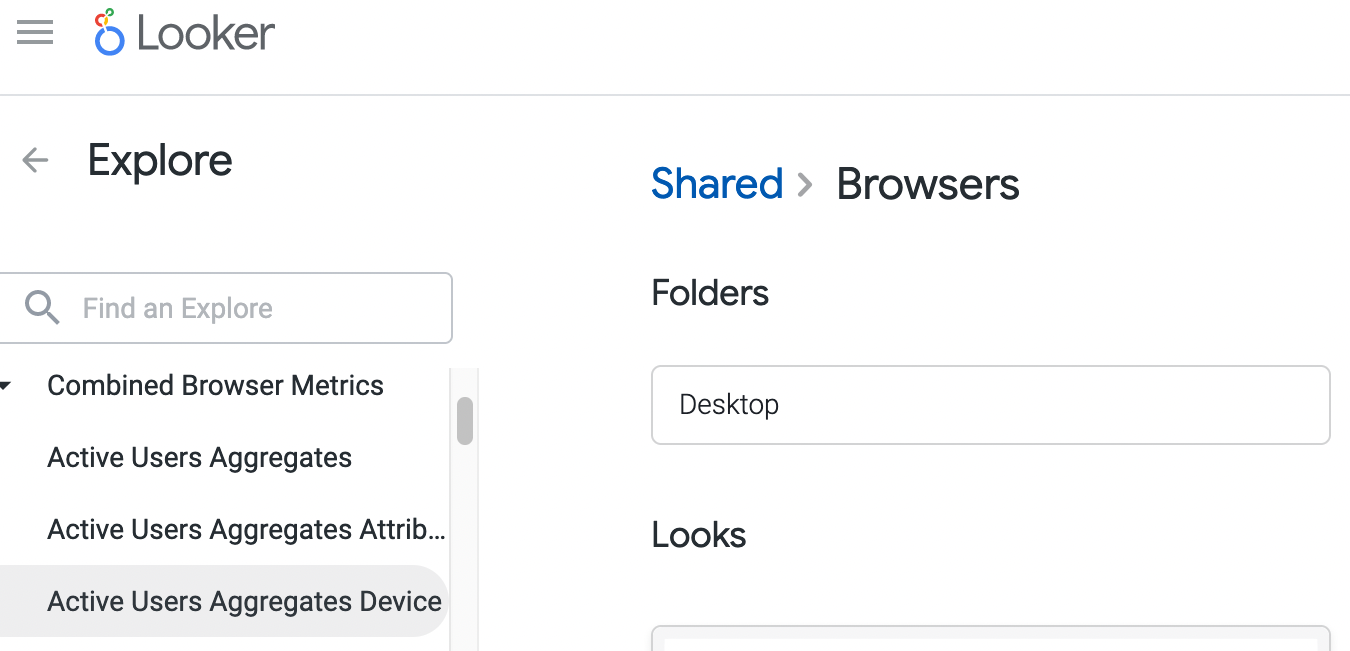

One of the core concepts of Looker are Explores. These allow you to quickly explore datasets (both ping-level and derived datasets) within an intuitive user interface.

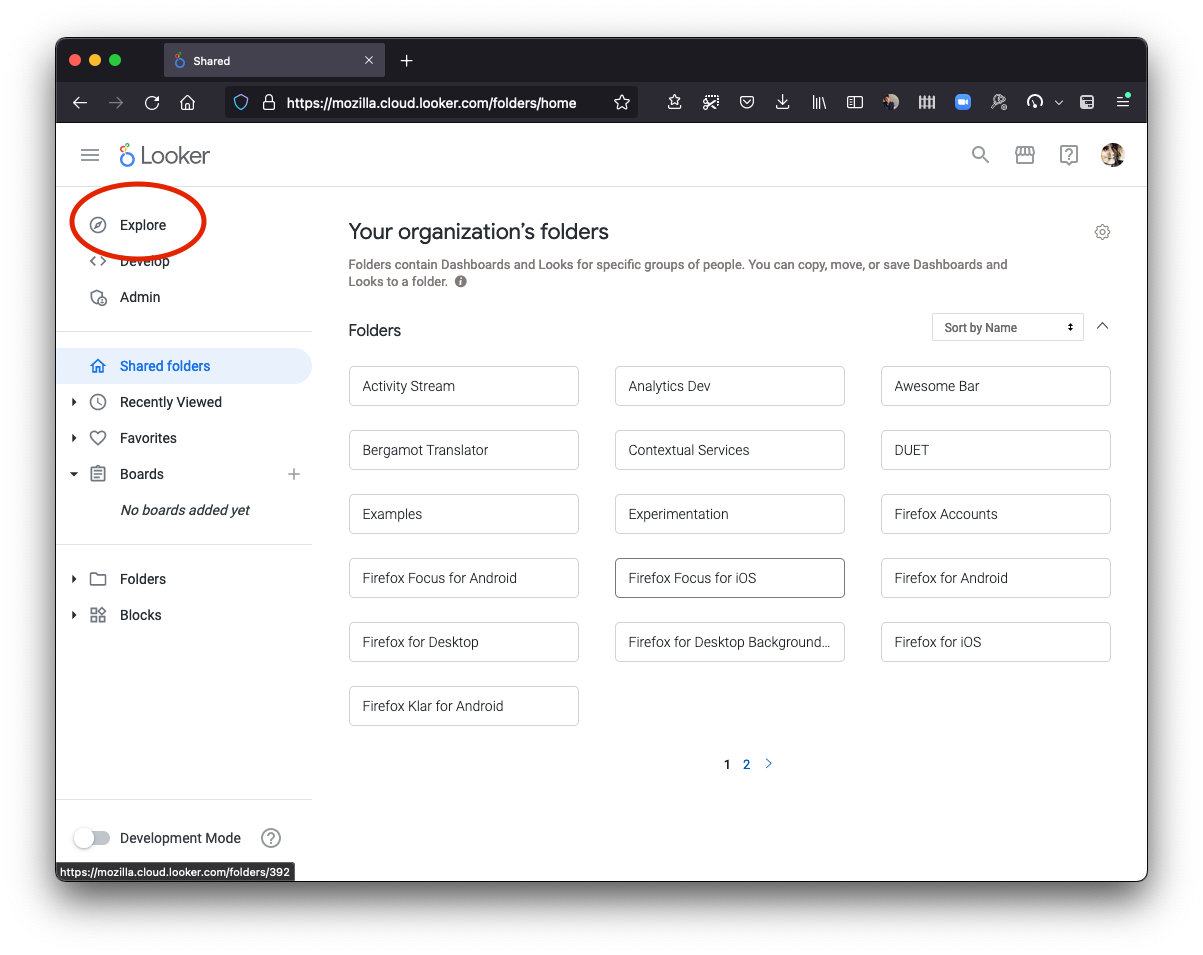

You can access the full list of explores available in Looker. From the main page on the left, select "Explore". From there, you can select an explore to view. Most explores are grouped by application. For example, there are a set of explores for both "Firefox Desktop" and "Firefox for Android".

Using the Glean Dictionary with Looker

The above list of explores can sometimes be overwhelming. If your application uses Glean to collect data, one very viable workflow is to look up information on the metric(s) you're interested in using the Glean Dictionary, then use the "Access" section at the bottom, which links directly out to the Looker explore(s) where you can access the data.

The following video demonstrates this workflow in detail:

Going Deeper

If you want to explore Looker more deeply, you can check out:

- "BI and Analytics with Looker" training hub: A collection of self-paced video training courses for new users. Full courses are free, but require registration, but course descriptions contain material that is useful on its own.

- Looker Documentation: Extensive text and video documentation, a “textbook” reference on how the product works.

- Looker Community has customer-written material, broadcasts from Looker employees (primarily release notes), and topics written by Looker employees that are not officially supported by Looker.

You can find additional Looker training resources on the Looker Training Resources mana page (LDAP access required).

Normalizing Country Data

This how-to guide is about getting standard country data in your Looker Explores, Looks and Dashboards:

This guide has only two steps: Normalizing Aliases and Accessing Standard Country Data.

⚠️ Some steps in this guide require knowledge of SQL and LookML - ask in #data-help for assistance if needed.

We get country data from many sources: partners, telemetry, third-party tools etc. In order to analyze these in a standard way, i.e. make different analyses comparable, we can conform these sources to a set of standard country codes, names, regions, sub-regions, etc.

Step One - Normalizing Aliases

⚠️ If your country data already consists of two-character ISO3166 codes, you can skip to Step Two!

We refer to a different input name for the same country as "alias". For example, your data might contain the country value "US", another might contain "USA" and yet another might contain "United States", etc. This can be confusing when read in a table or seen on a graph.

To normalize this, we maintain a mapping of aliases from each country to its two-character ISO3166 code This includes misspellings and alternate language encoding that we encounter in various datasets. For example:

CI:

- "Ivory Coast"

- "Côte d’Ivoire"

- "Côte d'Ivoire"

- "Cote d'Ivoire"

- "Côte d’Ivoire"

- "The Republic of Côte d'Ivoire"

To map (normalize) your input alias to its country code, add a LEFT join from your table or view to the alias country_lookup

table: mozdata.static.country_names_v1. For example:

SELECT

...

your_table.country_field,

COALESCE(country_lookup.code, your_table.country, '??') as country_code

...

FROM

your_table

LEFT JOIN mozdata.static.country_names_v1 country_lookup ON your_table.country_field = country_lookup.name

Note: we use ?? as a country-code for empty country data from data sources. This will map to "Unknown Country",

"Unknown Region", etc.

At this point, you should check for cases where the resulting country_code matches your_table.country but does

not match any values in the country_lookup table - you may have discovered a new alias, in which case please add it to the list!

You can do this via a bigquery-etl pull request for example: https://github.com/mozilla/bigquery-etl/pull/2858.

⚠️ This list of aliases is public. If you are working with sensitive data, please do not add to the public list of aliases, you should handle it in custom logic in code that interfaces with your sensitive data for example in private-bigquery-etl or the private Looker spoke.

If you are satisfied that the country_code field is appropriately normalized, move on to Step Two!

Step Two - Accessing Standard Country Data

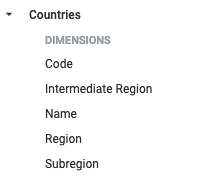

Standard country data is contained in the mozdata.static.country_codes_v1 table and by extension the

shared/views/countries Looker View.

Add the following join to your Explore (either in the .explore.lkml or .model.lkml file):

include: "/shared/views/*"

...

join: countries {

type: left_outer

relationship: one_to_one

sql_on: ${your_table.country_code} = ${countries.code} ;;

}

Now, you should be able to see the Countries View in your Explore 🎉

Normalizing Browser Version Data

This how-to guide is about getting numerical browser version data in your Looker Explores, Looks and Dashboards:

This guide only has one step: Normalizing Version Strings

⚠️ Some steps in this guide require knowledge of SQL - ask in #data-help for assistance if needed.

Many of our data sources (particularly browser telemetry) have version_id's: A string that (most of the time)

looks like "99.1.0" in the format "major.minor.patch".

⚠️ In SQL you might be tempted to compare these version identifiers. This might however, return misleading results!

"99" > "100"but99 < 100. Note the string vs number comparison.

Step One - Normalizing Version Strings

In your view.sql file, locate the browser version identifier. In many tables/views, this is called app_version.

To extract the numerical version data you have two options:

1. The truncate version UDF - truncate_version

This extracts the major or minor version from the version identifier. See the Mozfun Docs for a detailed description.

Modify your view.sql:

CREATE OR REPLACE VIEW

`project.dataset.view`

AS

SELECT

*,

`mozfun.norm.truncate_version`(app_version, "major") as major_browser_version -- <--- New Line

FROM

`project.dataset_derived.table`

major_version will be added as a new field containing the numerical major browser version.

2. The browser version info UDF - browser_version_info

This extracts a number of useful fields from the version identifier. See the Mozfun Docs for a detailed description.

Modify your view.sql:

CREATE OR REPLACE VIEW

`project.dataset.view`

AS

SELECT

*,

`mozfun.norm.browser_version_info`(app_version) as browser_version_info -- <--- New Line

FROM

`project.dataset_derived.table`

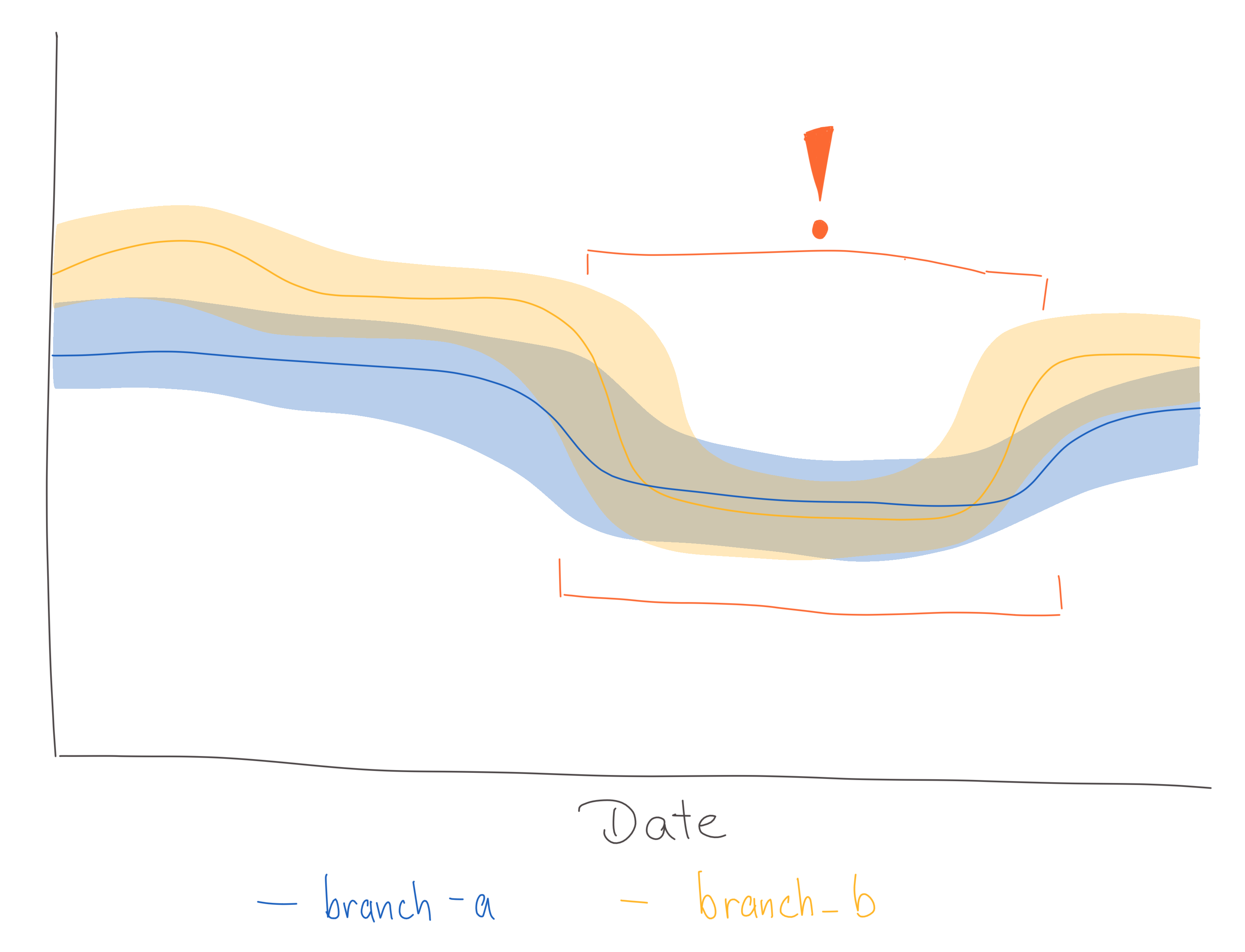

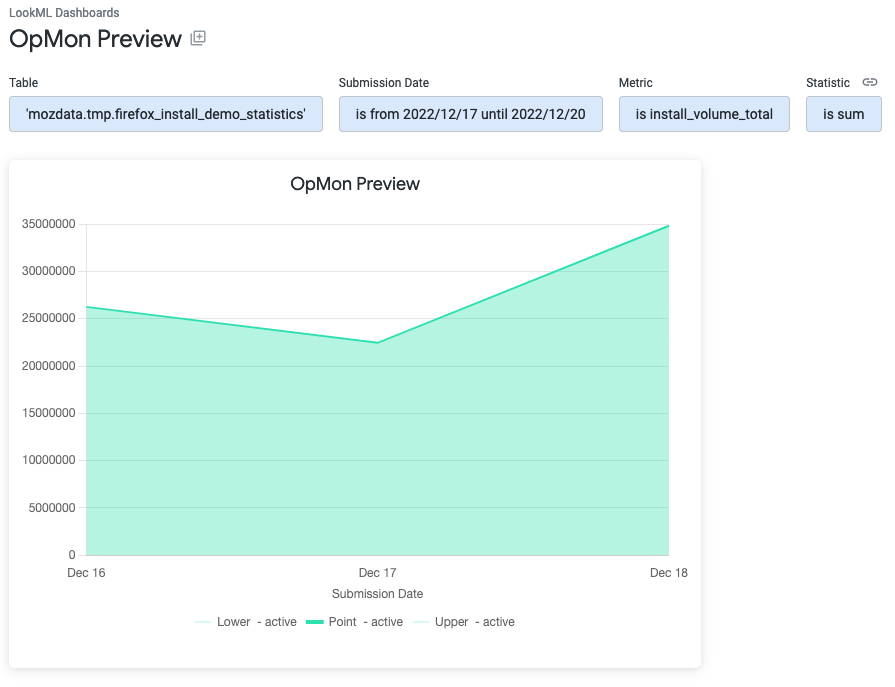

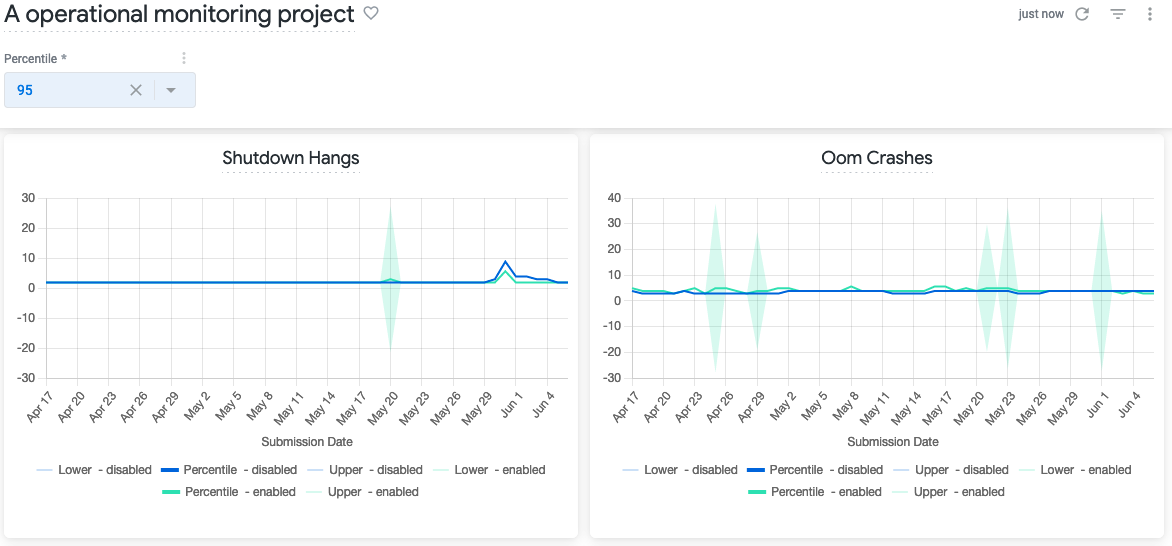

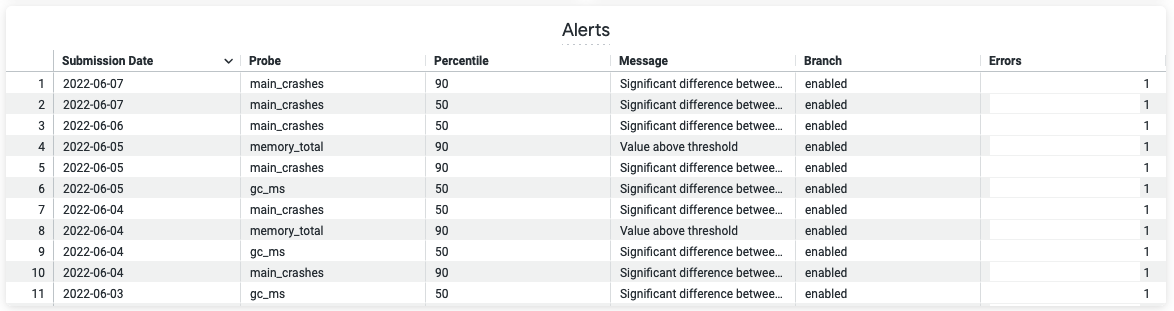

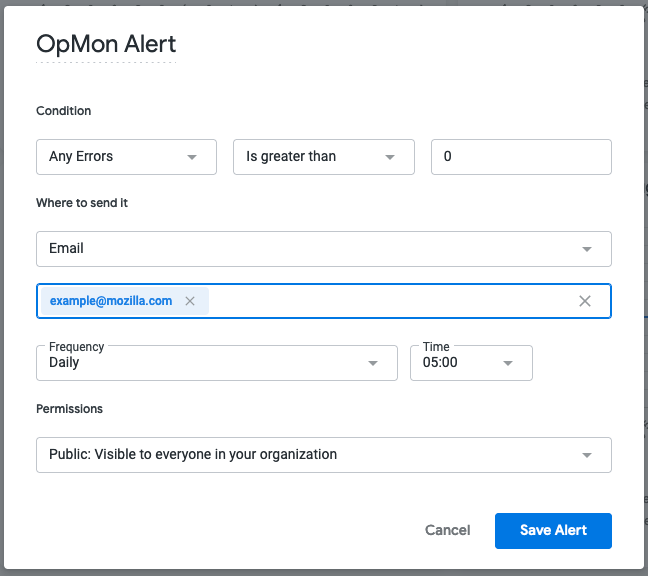

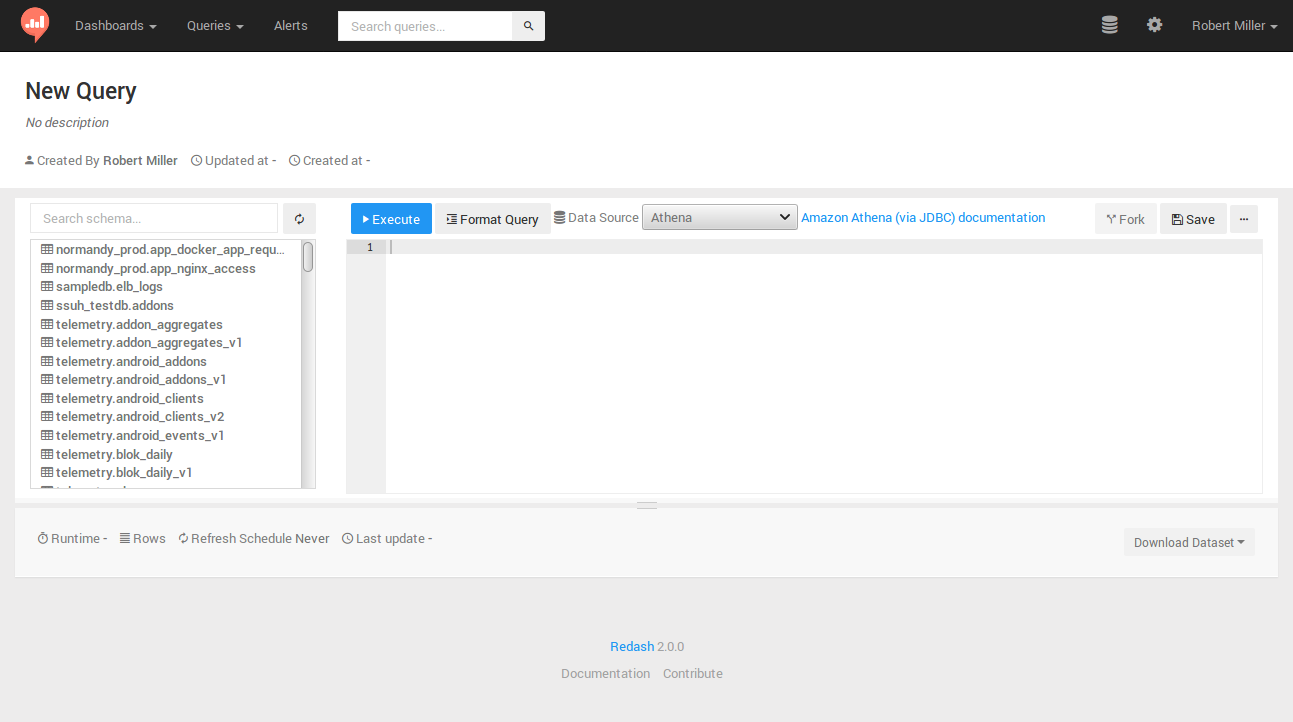

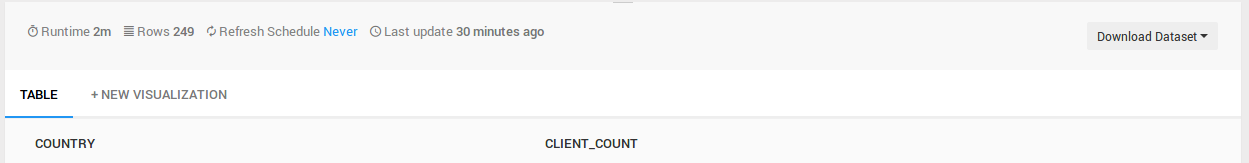

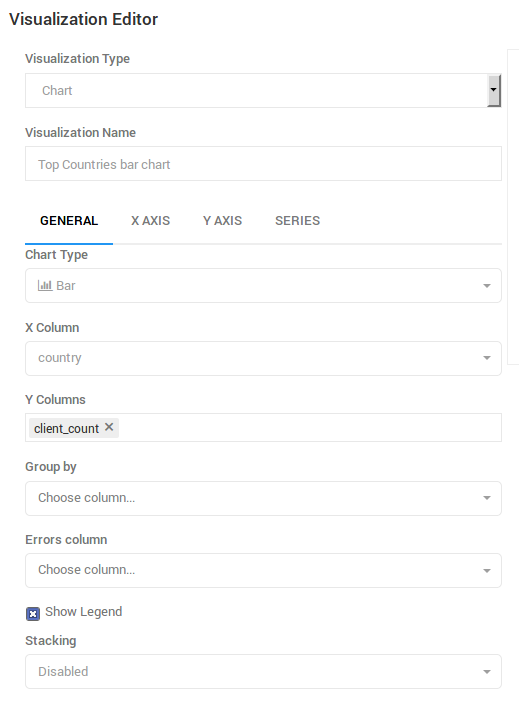

browser_version_info will be added as a new struct field containing numerical version fields and other useful metadata.